A company has a set of EC2 instances hosted in AWS. The EC2 instances have EBS volumes that are used to store critical information. There is a business continuity requirement to ensure high availability for the EBS volumes. How can you achieve this?

A.

Use lifecycle policies for the EBS volumes

B.

Use EBS Snapshots

C.

Use EBS volume replication

D.

Use EBS volume encryption

Use EBS Snapshots

Explanation: Data stored in Amazon EBS volumes is redundantly stored in multiple physical locations as part of normal operation of the service and at no additional charge. However, Amazon EBS replication is stored within the same Availability Zone, not across multiple zones; therefore, it is highly recommended that you take regular snapshots to Amazon S3 for long-term data durability. Option A is invalid because there is no lifecycle policy for EBS volumes. Option C is invalid because there is no EBS volume replication. Option D is invalid because EBS volume encryption will not ensure business continuity. For information on security for compute resources, please visit the URL below:

https://d1.awsstatic.com/whitepapers/Security/Security_Compute_Services_Whitepaper.pdf
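The snapshot recommendation above can be sketched in a few lines of Python. This is a minimal illustration, not a full backup scheduler: `snapshot_volume` is a hypothetical helper, and `ec2_client` is assumed to behave like a boto3 EC2 client (for example, the object returned by `boto3.client("ec2")`), whose `create_snapshot` call returns a dictionary containing `SnapshotId`.

```python
from datetime import datetime, timezone


def snapshot_volume(ec2_client, volume_id: str) -> str:
    """Start a point-in-time snapshot of one EBS volume and return its ID.

    ec2_client is assumed to expose create_snapshot() like a boto3 EC2 client.
    """
    stamp = datetime.now(timezone.utc).strftime("%Y-%m-%dT%H:%M:%SZ")
    response = ec2_client.create_snapshot(
        VolumeId=volume_id,
        Description=f"Scheduled backup of {volume_id} at {stamp}",
    )
    return response["SnapshotId"]
```

Running a helper like this on a schedule gives restore points that are stored outside the volume's own Availability Zone.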

A company has retail stores. The company is designing a solution to store scanned copies of customer receipts on Amazon S3. Files will be between 100 KB and 5 MB in PDF format. Each retail store must have a unique encryption key. Each object must be encrypted with a unique key. Which solution will meet these requirements?

A.

Create a dedicated AWS Key Management Service (AWS KMS) customer managed key for each retail store. Use the S3 Put operation to upload the objects to Amazon S3. Specify server-side encryption with AWS KMS keys (SSE-KMS) and the key ID of the store's key.

B.

Create a new AWS Key Management Service (AWS KMS) customer managed key every day for each retail store. Use the KMS Encrypt operation to encrypt objects. Then upload the objects to Amazon S3.

C.

Run the AWS Key Management Service (AWS KMS) GenerateDataKey operation every day for each retail store. Use the data key and client-side encryption to encrypt the objects. Then upload the objects to Amazon S3.

D.

Use the AWS Key Management Service (AWS KMS) ImportKeyMaterial operation to import new key material to AWS KMS every day for each retail store. Use a customer managed key and the KMS Encrypt operation to encrypt the objects. Then upload the objects to Amazon S3.

Create a dedicated AWS Key Management Service (AWS KMS) customer managed key for each retail store. Use the S3 Put operation to upload the objects to Amazon S3. Specify server-side encryption with AWS KMS keys (SSE-KMS) and the key ID of the store's key.

Explanation: The requirements are that each retail store has its own encryption key and that each object is encrypted with a unique key. The most appropriate solution is to create a dedicated AWS Key Management Service (AWS KMS) customer managed key for each retail store, then use the S3 Put operation to upload the objects to Amazon S3, specifying server-side encryption with AWS KMS keys (SSE-KMS) and the key ID of the store’s key. With SSE-KMS, Amazon S3 encrypts each object with its own data key that is protected by the specified KMS key, so the per-object requirement is met without any client-side encryption code.
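As a rough sketch of the upload call described in this answer, the helper below builds the keyword arguments for an S3 `put_object` request with SSE-KMS. The function name, bucket name, object key, and KMS key ID are hypothetical placeholders; the parameter names (`ServerSideEncryption`, `SSEKMSKeyId`) are the ones the boto3 S3 client accepts.

```python
def sse_kms_put_args(bucket: str, key: str, body: bytes, store_key_id: str) -> dict:
    """Build put_object arguments that encrypt a receipt with a store's KMS key."""
    return {
        "Bucket": bucket,
        "Key": key,
        "Body": body,
        "ContentType": "application/pdf",
        # Ask S3 to encrypt server-side with the store's customer managed key.
        "ServerSideEncryption": "aws:kms",
        "SSEKMSKeyId": store_key_id,
    }
```

With a boto3 client, the upload would then look like `s3.put_object(**sse_kms_put_args(...))`, with one KMS key ID per store.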


A company needs to store multiple years of financial records. The company wants to use Amazon S3 to store copies of these documents. The company must implement a solution to prevent the documents from being edited, replaced, or deleted for 7 years after the documents are stored in Amazon S3. The solution must also encrypt the documents at rest.

A security engineer creates a new S3 bucket to store the documents. What should the security engineer do next to meet these requirements?

A.

Configure S3 server-side encryption. Create an S3 bucket policy that has an explicit deny rule for all users for s3:DeleteObject and s3:PutObject API calls. Configure S3 Object Lock to use governance mode with a retention period of 7 years.

B.

Configure S3 server-side encryption. Configure S3 Versioning on the S3 bucket. Configure S3 Object Lock to use compliance mode with a retention period of 7 years.

C.

Configure S3 Versioning. Configure S3 Intelligent-Tiering on the S3 bucket to move the documents to S3 Glacier Deep Archive storage. Use S3 server-side encryption immediately. Expire the objects after 7 years.

D.

Set up S3 Event Notifications and use S3 server-side encryption. Configure S3 Event Notifications to target an AWS Lambda function that will review any S3 API call to the S3 bucket and deny the s3:DeleteObject and s3:PutObject API calls. Remove the S3 event notification after 7 years.

Configure S3 server-side encryption. Configure S3 Versioning on the S3 bucket. Configure S3 Object Lock to use compliance mode with a retention period of 7 years.
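For illustration, the default-retention part of this answer can be expressed as the configuration document passed to the S3 `PutObjectLockConfiguration` API. The helper name is hypothetical; the field names follow the boto3 `put_object_lock_configuration` request shape. Object Lock only works on a versioned bucket, which is why the answer also enables S3 Versioning.

```python
def compliance_lock_config(years: int = 7) -> dict:
    """Return an Object Lock configuration with a compliance-mode default retention."""
    if years <= 0:
        raise ValueError("retention period must be positive")
    return {
        "ObjectLockEnabled": "Enabled",
        "Rule": {
            # In compliance mode no user, including the root user, can shorten
            # or remove the retention period before it expires.
            "DefaultRetention": {"Mode": "COMPLIANCE", "Years": years},
        },
    }
```

Governance mode, by contrast, can be bypassed by principals that hold the `s3:BypassGovernanceRetention` permission, which is why option A does not meet the requirement.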

A company uses AWS Organizations to manage a small number of AWS accounts. However, the company plans to add 1,000 more accounts soon. The company allows only a centralized security team to create IAM roles for all AWS accounts and teams. Application teams submit requests for IAM roles to the security team. The security team has a backlog of IAM role requests and cannot review and provision the IAM roles quickly. The security team must create a process that will allow application teams to provision their own IAM roles. The process must also limit the scope of IAM roles and prevent privilege escalation. Which solution will meet these requirements with the LEAST operational overhead?

A.

Create an IAM group for each application team. Associate policies with each IAM group. Provision IAM users for each application team member. Add the new IAM users to the appropriate IAM group by using role-based access control (RBAC).

B.

Delegate application team leads to provision IAM roles for each team. Conduct a quarterly review of the IAM roles the team leads have provisioned. Ensure that the application team leads have the appropriate training to review IAM roles.

C.

Put each AWS account in its own OU. Add an SCP to each OU to grant access to only the AWS services that the teams plan to use. Include conditions in the AWS account of each team.

D.

Create an SCP and a permissions boundary for IAM roles. Add the SCP to the root OU so that only roles that have the permissions boundary attached can create any new IAM roles.

Create an SCP and a permissions boundary for IAM roles. Add the SCP to the root OU so that only roles that have the permissions boundary attached can create any new IAM roles.

Explanation:

To create a process that will allow application teams to provision their own IAM roles, while limiting the scope of IAM roles and preventing privilege escalation, the following steps are required:

Create a service control policy (SCP) that defines the maximum permissions that can be granted to any IAM role in the organization. An SCP is a type of policy that you can use with AWS Organizations to manage permissions for all accounts in your organization. SCPs restrict permissions for entities in member accounts, including each AWS account root user, IAM users, and roles. For more information, see Service control policies overview.

Create a permissions boundary for IAM roles that matches the SCP. A permissions boundary is an advanced feature for using a managed policy to set the maximum permissions that an identity-based policy can grant to an IAM entity. A permissions boundary allows an entity to perform only the actions that are allowed by both its identity-based policies and its permissions boundaries. For more information, see Permissions boundaries for IAM entities.

Add the SCP to the root organizational unit (OU) so that it applies to all accounts in the organization. This will ensure that no IAM role can exceed the permissions defined by the SCP, regardless of how it is created or modified.

Instruct the application teams to attach the permissions boundary to any IAM role they create. This will prevent them from creating IAM roles that can escalate their own privileges or access resources they are not authorized to access.

This solution will meet the requirements with the least operational overhead, as it leverages AWS Organizations and IAM features to delegate and limit IAM role creation without requiring manual reviews or approvals.

The other options are incorrect because they either do not allow application teams to provision their own IAM roles (A), do not limit the scope of IAM roles or prevent privilege escalation (B), or do not take advantage of managed services whenever possible (C).

Verified References:

https://docs.aws.amazon.com/organizations/latest/userguide/orgs_manage_policies_scp.html
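One common way to tie an SCP to a permissions boundary is to deny `iam:CreateRole` unless the request attaches the approved boundary policy. The sketch below generates such an SCP as JSON. It is an assumption about how the answer might be implemented, not the exam's reference policy: the function name and the boundary policy name are hypothetical, while `iam:PermissionsBoundary` is a real IAM condition key.

```python
import json


def boundary_scp(boundary_policy_name: str) -> str:
    """Return an SCP (as JSON) that blocks role creation without the boundary."""
    # Wildcard account ID so the same SCP works in every member account.
    boundary_arn = f"arn:aws:iam::*:policy/{boundary_policy_name}"
    policy = {
        "Version": "2012-10-17",
        "Statement": [
            {
                "Sid": "DenyCreateRoleWithoutBoundary",
                "Effect": "Deny",
                "Action": "iam:CreateRole",
                "Resource": "*",
                "Condition": {
                    "StringNotLike": {"iam:PermissionsBoundary": boundary_arn}
                },
            }
        ],
    }
    return json.dumps(policy, indent=2)
```

A production policy would usually also protect the boundary itself, for example by denying changes to the boundary policy and removal of the boundary from existing roles.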

A company has a single AWS account and uses an Amazon EC2 instance to test application code. The company recently discovered that the instance was compromised. The instance was serving up malware. The analysis of the instance showed that the instance was compromised 35 days ago. A security engineer must implement a continuous monitoring solution that automatically notifies the company’s security team about compromised instances through an email distribution list for high severity findings. The security engineer must implement the solution as soon as possible. Which combination of steps should the security engineer take to meet these requirements? (Choose three.)

A.

Enable AWS Security Hub in the AWS account.

B.

Enable Amazon GuardDuty in the AWS account.

C.

Create an Amazon Simple Notification Service (Amazon SNS) topic. Subscribe the security team’s email distribution list to the topic.

D.

Create an Amazon Simple Queue Service (Amazon SQS) queue. Subscribe the security team’s email distribution list to the queue.

E.

Create an Amazon EventBridge (Amazon CloudWatch Events) rule for GuardDuty findings of high severity. Configure the rule to publish a message to the topic.

F.

Create an Amazon EventBridge (Amazon CloudWatch Events) rule for Security Hub findings of high severity. Configure the rule to publish a message to the queue.

Enable Amazon GuardDuty in the AWS account.

Create an Amazon Simple Notification Service (Amazon SNS) topic. Subscribe the security team’s email distribution list to the topic.

Create an Amazon EventBridge (Amazon CloudWatch Events) rule for GuardDuty findings of high severity. Configure the rule to publish a message to the topic.
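The EventBridge rule in this answer needs an event pattern that matches only high-severity GuardDuty findings. A minimal sketch of that pattern is shown below; the helper name is hypothetical, and the threshold of 7.0 assumes GuardDuty's documented severity bands, where values of 7.0 and above are High or Critical.

```python
import json


def guardduty_high_severity_pattern(min_severity: float = 7.0) -> str:
    """Return an EventBridge event pattern (JSON) for high-severity findings."""
    pattern = {
        "source": ["aws.guardduty"],
        "detail-type": ["GuardDuty Finding"],
        # Numeric matching on the finding's severity field.
        "detail": {"severity": [{"numeric": [">=", min_severity]}]},
    }
    return json.dumps(pattern)
```

The resulting string can be supplied as the `EventPattern` of the rule, with the SNS topic from option C as the rule's target.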

A developer at a company uses an SSH key to access multiple Amazon EC2 instances. The company discovers that the SSH key has been posted on a public GitHub repository. A security engineer verifies that the key has not been used recently. How should the security engineer prevent unauthorized access to the EC2 instances?

A.

Delete the key pair from the EC2 console. Create a new key pair.

B.

Use the ModifyInstanceAttribute API operation to change the key on any EC2 instance that is using the key.

C.

Restrict SSH access in the security group to only known corporate IP addresses.

D.

Update the key pair in any AMI that is used to launch the EC2 instances. Restart the EC2 instances.

Restrict SSH access in the security group to only known corporate IP addresses.

Explanation: To prevent unauthorized access to the EC2 instances, the security engineer should do the following:

Restrict SSH access in the security group to only known corporate IP addresses. This allows the security engineer to use a virtual firewall that controls inbound and outbound traffic for their EC2 instances, and limit SSH access to only trusted sources.
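As a sketch of what such a security group rule looks like, the helper below builds the `IpPermissions` structure used by the EC2 `AuthorizeSecurityGroupIngress` API. The function name and the CIDR range in the usage note are hypothetical (203.0.113.0/24 is a documentation-only range), and the helper handles IPv4 ranges only.

```python
from __future__ import annotations

import ipaddress


def ssh_ingress_permissions(corporate_cidrs: list[str]) -> list[dict]:
    """Build IpPermissions that allow TCP port 22 only from the given IPv4 CIDRs."""
    ranges = []
    for cidr in corporate_cidrs:
        network = ipaddress.IPv4Network(cidr)  # raises ValueError if malformed
        if network.prefixlen == 0:
            raise ValueError("refusing to open SSH to the whole internet")
        ranges.append({"CidrIp": str(network), "Description": "Corporate SSH"})
    return [{"IpProtocol": "tcp", "FromPort": 22, "ToPort": 22, "IpRanges": ranges}]
```

For example, `ssh_ingress_permissions(["203.0.113.0/24"])` yields a single rule for port 22. Any existing rule that allows 0.0.0.0/0 on port 22 would still need to be revoked separately.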

A team is using AWS Secrets Manager to store an application database password. Only a limited number of IAM principals within the account can have access to the secret. The principals who require access to the secret change frequently. A security engineer must create a solution that maximizes flexibility and scalability. Which solution will meet these requirements?

A.

Use a role-based approach by creating an IAM role with an inline permissions policy that allows access to the secret. Update the IAM principals in the role trust policy as required.

B.

Deploy a VPC endpoint for Secrets Manager. Create and attach an endpoint policy that specifies the IAM principals that are allowed to access the secret. Update the list of IAM principals as required.

C.

Use a tag-based approach by attaching a resource policy to the secret. Apply tags to the secret and the IAM principals. Use the aws:PrincipalTag and aws:ResourceTag IAM condition keys to control access.

D.

Use a deny-by-default approach by using IAM policies to deny access to the secret explicitly. Attach the policies to an IAM group. Add all IAM principals to the IAM group. Remove principals from the group when they need access. Add the principals to the group again when access is no longer allowed.

Use a tag-based approach by attaching a resource policy to the secret. Apply tags to the secret and the IAM principals. Use the aws:PrincipalTag and aws:ResourceTag IAM condition keys to control access.
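A tag-based resource policy of this kind might look like the sketch below. It is one possible shape under stated assumptions: the helper name, the tag key `team`, and the use of `aws:PrincipalAccount` to keep the policy scoped to a single account are illustrative choices, not taken from the question. The condition compares the secret's tag with the caller's tag through a policy variable.

```python
import json


def abac_secret_policy(account_id: str, tag_key: str = "team") -> str:
    """Return a secret resource policy (JSON) that matches principal and resource tags."""
    policy = {
        "Version": "2012-10-17",
        "Statement": [
            {
                "Effect": "Allow",
                "Principal": "*",
                "Action": "secretsmanager:GetSecretValue",
                "Resource": "*",
                "Condition": {
                    "StringEquals": {
                        # Only principals from this account ...
                        "aws:PrincipalAccount": account_id,
                        # ... whose tag value equals the secret's tag value.
                        f"aws:ResourceTag/{tag_key}": "${aws:PrincipalTag/" + tag_key + "}",
                    }
                },
            }
        ],
    }
    return json.dumps(policy, indent=2)
```

With this approach, access changes are made by tagging or untagging principals rather than by editing the policy each time the list of principals changes.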

A security engineer is creating an AWS Lambda function. The Lambda function needs to use a role that is named LambdaAuditRole to assume a role that is named AcmeAuditFactoryRole in a different AWS account. When the code is processed, the following error message appears: "An error occurred (AccessDenied) when calling the AssumeRole operation." Which combination of steps should the security engineer take to resolve this error? (Select TWO.)

A.

Ensure that LambdaAuditRole has the sts:AssumeRole permission for AcmeAuditFactoryRole.

B.

Ensure that LambdaAuditRole has the AWSLambdaBasicExecutionRole managed policy attached.

C.

Ensure that the trust policy for AcmeAuditFactoryRole allows the sts:AssumeRole action from LambdaAuditRole.

D.

Ensure that the trust policy for LambdaAuditRole allows the sts:AssumeRole action from the lambda.amazonaws.com service.

E.

Ensure that the sts:AssumeRole API call is being issued to the us-east-1 Region endpoint.

Ensure that LambdaAuditRole has the sts:AssumeRole permission for AcmeAuditFactoryRole.

Ensure that the trust policy for AcmeAuditFactoryRole allows the sts:AssumeRole action from LambdaAuditRole.
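Cross-account role assumption needs both halves of the permission: an identity policy on the calling role and a trust policy on the target role. The sketch below builds both documents for the two role names in the question; the helper name and the account IDs in the usage note are hypothetical placeholders.

```python
def assume_role_policies(caller_account: str, target_account: str) -> tuple:
    """Return (identity_policy, trust_policy) for the cross-account AssumeRole call."""
    caller_role_arn = f"arn:aws:iam::{caller_account}:role/LambdaAuditRole"
    target_role_arn = f"arn:aws:iam::{target_account}:role/AcmeAuditFactoryRole"

    # Attached to LambdaAuditRole: permission to call sts:AssumeRole on the target.
    identity_policy = {
        "Version": "2012-10-17",
        "Statement": [
            {"Effect": "Allow", "Action": "sts:AssumeRole", "Resource": target_role_arn}
        ],
    }
    # Trust policy of AcmeAuditFactoryRole: who is allowed to assume it.
    trust_policy = {
        "Version": "2012-10-17",
        "Statement": [
            {
                "Effect": "Allow",
                "Principal": {"AWS": caller_role_arn},
                "Action": "sts:AssumeRole",
            }
        ],
    }
    return identity_policy, trust_policy
```

Calling `assume_role_policies("111111111111", "222222222222")` returns the two documents; if either one is missing, the AssumeRole call fails with AccessDenied.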

A company stores images for a website in an Amazon S3 bucket. The company is using Amazon CloudFront to serve the images to end users. The company recently discovered that the images are being accessed from countries where the company does not have a distribution license. Which actions should the company take to secure the images to limit their distribution? (Select TWO.)

A.

Update the S3 bucket policy to restrict access to a CloudFront origin access identity (OAI).

B.

Update the website DNS record to use an Amazon Route 53 geolocation record deny list of countries where the company lacks a license.

C.

Add a CloudFront geo restriction deny list of countries where the company lacks a license.

D.

Update the S3 bucket policy with a deny list of countries where the company lacks a license.

E.

Enable the Restrict Viewer Access option in CloudFront to create a deny list of countries where the company lacks a license.

Update the S3 bucket policy to restrict access to a CloudFront origin access identity (OAI).

Add a CloudFront geo restriction deny list of countries where the company lacks a license.

Explanation: To secure the images to limit their distribution, the company should take the following actions:

Update the S3 bucket policy to restrict access to a CloudFront origin access identity (OAI). This allows the company to use a special CloudFront user that can access objects in their S3 bucket, and prevent anyone else from accessing them directly.

Add a CloudFront geo restriction deny list of countries where the company lacks a license. This allows the company to use a feature that controls access to their content based on the geographic location of their viewers, and block requests from countries where they do not have a distribution license.
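The two actions can be sketched as configuration documents. The helpers below are illustrative only: the function names, bucket name, and OAI ID are hypothetical, while the OAI principal ARN format and the `GeoRestriction` fields (`RestrictionType`, `Quantity`, `Items`) follow the CloudFront API, which uses the value `blacklist` for a deny list.

```python
def oai_bucket_policy(bucket: str, oai_id: str) -> dict:
    """Bucket policy that lets only the given CloudFront OAI read objects."""
    return {
        "Version": "2012-10-17",
        "Statement": [
            {
                "Effect": "Allow",
                "Principal": {
                    "AWS": f"arn:aws:iam::cloudfront:user/CloudFront Origin Access Identity {oai_id}"
                },
                "Action": "s3:GetObject",
                "Resource": f"arn:aws:s3:::{bucket}/*",
            }
        ],
    }


def geo_deny_list(country_codes: list) -> dict:
    """Restrictions block for a CloudFront distribution config (ISO 3166-1 alpha-2 codes)."""
    codes = sorted({code.upper() for code in country_codes})
    return {
        "GeoRestriction": {
            "RestrictionType": "blacklist",
            "Quantity": len(codes),
            "Items": codes,
        }
    }
```

The bucket policy closes the direct S3 path, so the geo restriction on the distribution cannot be bypassed by requesting objects from the bucket URL.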

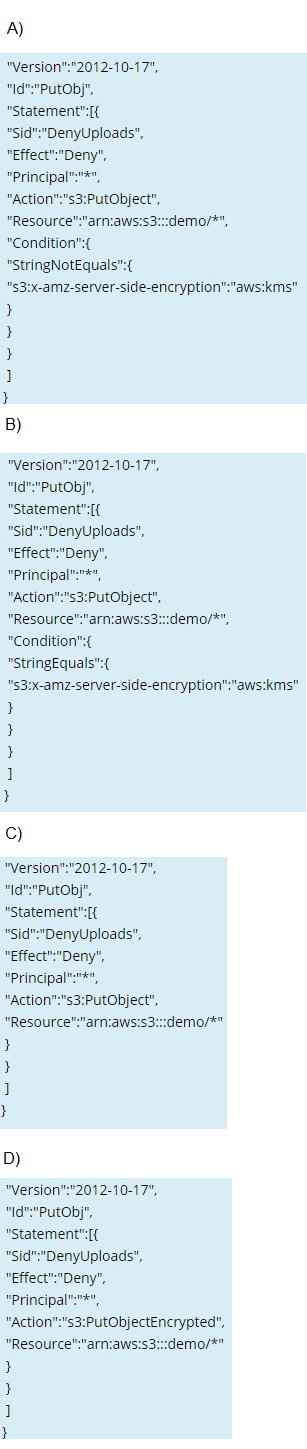

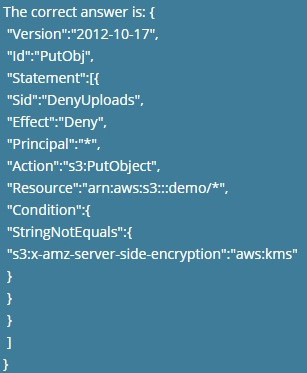

Which of the following bucket policies will ensure that objects being uploaded to a bucket called 'demo' are encrypted?

Please select:

A.

Option A

B.

Option B

C.

Option C

D.

Option D

Option A

Explanation: The condition "s3:x-amz-server-side-encryption":"aws:kms" ensures that uploaded objects must be encrypted. Options B, C and D are invalid because the condition "s3:x-amz-server-side-encryption":"aws:kms" must be present.

For more information on AWS KMS best practices, see the URL below:

https://d1.awsstatic.com/whitepapers/aws-kms-best-practices.pdf
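Because the answer options are only available as images, the following is a generic sketch of a bucket policy that enforces the condition named in the explanation, not a reproduction of Option A. It denies any `s3:PutObject` request to the bucket that does not specify SSE-KMS; the helper name is hypothetical.

```python
def require_kms_encryption_policy(bucket: str = "demo") -> dict:
    """Bucket policy that rejects uploads not encrypted with SSE-KMS."""
    return {
        "Version": "2012-10-17",
        "Statement": [
            {
                "Sid": "DenyUnencryptedUploads",
                "Effect": "Deny",
                "Principal": "*",
                "Action": "s3:PutObject",
                "Resource": f"arn:aws:s3:::{bucket}/*",
                "Condition": {
                    # Deny when the request header is anything other than aws:kms.
                    "StringNotEquals": {"s3:x-amz-server-side-encryption": "aws:kms"}
                },
            }
        ],
    }
```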

A developer is building a serverless application hosted on AWS that uses Amazon Redshift as a data store. The application has separate modules for read/write and read-only functionality. The modules need their own database users for compliance reasons. Which combination of steps should a security engineer implement to grant appropriate access?

A.

Configure cluster security groups for each application module to control access to database users that are required for read-only and read/write.

B.

Configure a VPC endpoint for Amazon Redshift. Configure an endpoint policy that maps database users to each application module, and allow access to the tables that are required for read-only and read/write.

C.

Configure an IAM policy for each module. Specify the ARN of an Amazon Redshift database user that allows the GetClusterCredentials API call.

D.

Create local database users for each module.

E.

Configure an IAM policy for each module. Specify the ARN of an IAM user that allows the GetClusterCredentials API call.

Configure cluster security groups for each application module to control access to database users that are required for read-only and read/write.

Explanation: To grant appropriate access to separate modules for read/write and read-only functionality in a serverless application hosted on AWS that uses Amazon Redshift as a data store, a security engineer should configure cluster security groups for each application module to control access to database users that are required for read-only and read/write, and configure an IAM policy for each module specifying the ARN of an IAM user that allows the GetClusterCredentials API call.


A company has an AWS Lambda function that creates image thumbnails from larger images. The Lambda function needs read and write access to an Amazon S3 bucket in the same AWS account. Which solutions will provide the Lambda function this access? (Select TWO.)

A.

Create an IAM user that has only programmatic access. Create a new access key pair. Add environmental variables to the Lambda function with the access key ID and secret access key. Modify the Lambda function to use the environmental variables at run time during communication with Amazon S3.

B.

Generate an Amazon EC2 key pair. Store the private key in AWS Secrets Manager. Modify the Lambda function to retrieve the private key from Secrets Manager and to use the private key during communication with Amazon S3.

C.

Create an IAM role for the Lambda function. Attach an IAM policy that allows access to the S3 bucket.

D.

Create an IAM role for the Lambda function. Attach a bucket policy to the S3 bucket to allow access. Specify the function's IAM role as the principal.

E.

Create a security group. Attach the security group to the Lambda function. Attach a bucket policy that allows access to the S3 bucket through the security group ID.

Create an IAM role for the Lambda function. Attach an IAM policy that allows access to the S3 bucket.

Create an IAM role for the Lambda function. Attach a bucket policy to the S3 bucket to allow access. Specify the function's IAM role as the principal.
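To make the two correct options concrete, the sketch below builds the identity policy for option C and the bucket policy for option D. The helper names, bucket name, and role ARN are hypothetical placeholders; the actions shown (`s3:GetObject`, `s3:PutObject`) are an assumed minimum for reading source images and writing thumbnails.

```python
def lambda_s3_identity_policy(bucket: str) -> dict:
    """Option C: policy attached to the function's execution role."""
    return {
        "Version": "2012-10-17",
        "Statement": [
            {
                "Effect": "Allow",
                "Action": ["s3:GetObject", "s3:PutObject"],
                "Resource": f"arn:aws:s3:::{bucket}/*",
            }
        ],
    }


def lambda_s3_bucket_policy(bucket: str, role_arn: str) -> dict:
    """Option D: bucket policy that names the execution role as the principal."""
    return {
        "Version": "2012-10-17",
        "Statement": [
            {
                "Effect": "Allow",
                "Principal": {"AWS": role_arn},
                "Action": ["s3:GetObject", "s3:PutObject"],
                "Resource": f"arn:aws:s3:::{bucket}/*",
            }
        ],
    }
```

Within a single account, either policy on its own is enough to grant the access, which is why the question lists them as two separate solutions.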
