Topic 3, Mix Questions

You use Azure Stream Analytics to receive Twitter data from Azure Event Hubs and to output the data to an Azure Blob storage account.

You need to output the count of tweets during the last five minutes every five minutes.

Each tweet must only be counted once.

Which windowing function should you use?

A.

a five-minute Session window

B.

a five-minute Sliding window

C.

a five-minute Tumbling window

D.

a five-minute Hopping window that has one-minute hop

a five-minute Tumbling window

Explanation:

Tumbling window functions segment a data stream into distinct time segments and perform a function against each one. The key differentiators of tumbling windows are that they repeat, do not overlap, and an event cannot belong to more than one tumbling window.
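The non-overlapping behaviour described above can be illustrated with a small Python sketch. This is not Stream Analytics query language, only a model of how a five-minute tumbling window assigns each event to exactly one bucket. The names `window_start` and `count_per_window` are hypothetical, and aligning windows to the Unix epoch is an assumption made for the illustration.

```python
from collections import Counter
from datetime import datetime, timedelta, timezone

WINDOW = timedelta(minutes=5)                       # window size from the question
EPOCH = datetime(1970, 1, 1, tzinfo=timezone.utc)   # assumed alignment origin


def window_start(ts: datetime, size: timedelta = WINDOW) -> datetime:
    """Return the start of the single tumbling window that contains ts."""
    elapsed = ts - EPOCH
    return EPOCH + (elapsed // size) * size


def count_per_window(timestamps, size: timedelta = WINDOW) -> Counter:
    """Count events per window; every event lands in exactly one window."""
    return Counter(window_start(ts, size) for ts in timestamps)
```

Because each timestamp maps to one window start, the per-window counts always add up to the total number of events, which is the "counted only once" property the question asks for. A hopping or sliding window would let the same event appear in several windows.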

References:

https://docs.microsoft.com/en-us/azure/stream-analytics/stream-analytics-window-functions

What should you recommend to prevent users outside the Litware on-premises network from accessing the analytical data store?

A.

a server-level virtual network rule

B.

a database-level virtual network rule

C.

a database-level firewall IP rule

D.

a server-level firewall IP rule

a server-level virtual network rule

Virtual network rules are one firewall security feature that controls whether the database

server for your single databases and elastic pool in Azure SQL Database or for your

databases in SQL Data Warehouse accepts communications that are sent from particular

subnets in virtual networks.

Server-level, not database-level: Each virtual network rule applies to your whole Azure SQL

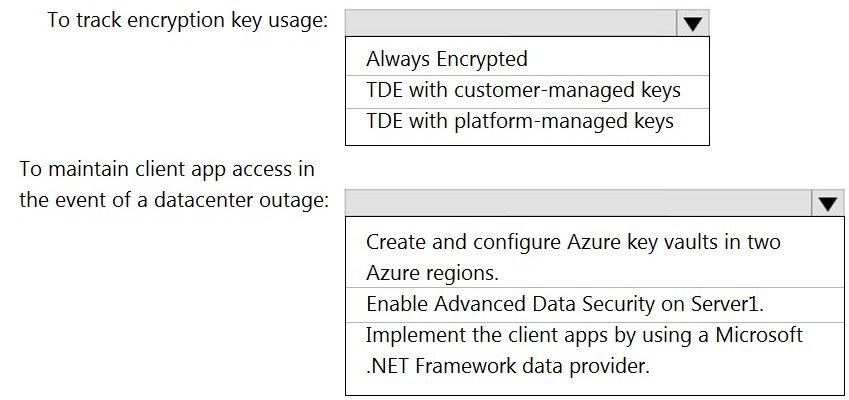

You have an Azure subscription that contains a logical Microsoft SQL server named

Server1. Server1 hosts an Azure Synapse Analytics SQL dedicated pool named Pool1.

You need to recommend a Transparent Data Encryption (TDE) solution for Server1. The

solution must meet the following requirements:

Track the usage of encryption keys.

Maintain the access of client apps to Pool1 in the event of an Azure datacenter

outage that affects the availability of the encryption keys.

What should you include in the recommendation? To answer, select the appropriate

options in the answer area.

NOTE: Each correct selection is worth one point.

You have an Azure Storage account and a data warehouse in Azure Synapse Analytics in

the UK South region.

You need to copy blob data from the storage account to the data warehouse by using

Azure Data Factory. The solution must meet the following requirements:

Ensure that the data remains in the UK South region at all times.

Minimize administrative effort.

Which type of integration runtime should you use?

A.

Azure integration runtime

B.

Azure-SSIS integration runtime

C.

Self-hosted integration runtime

Azure integration runtime

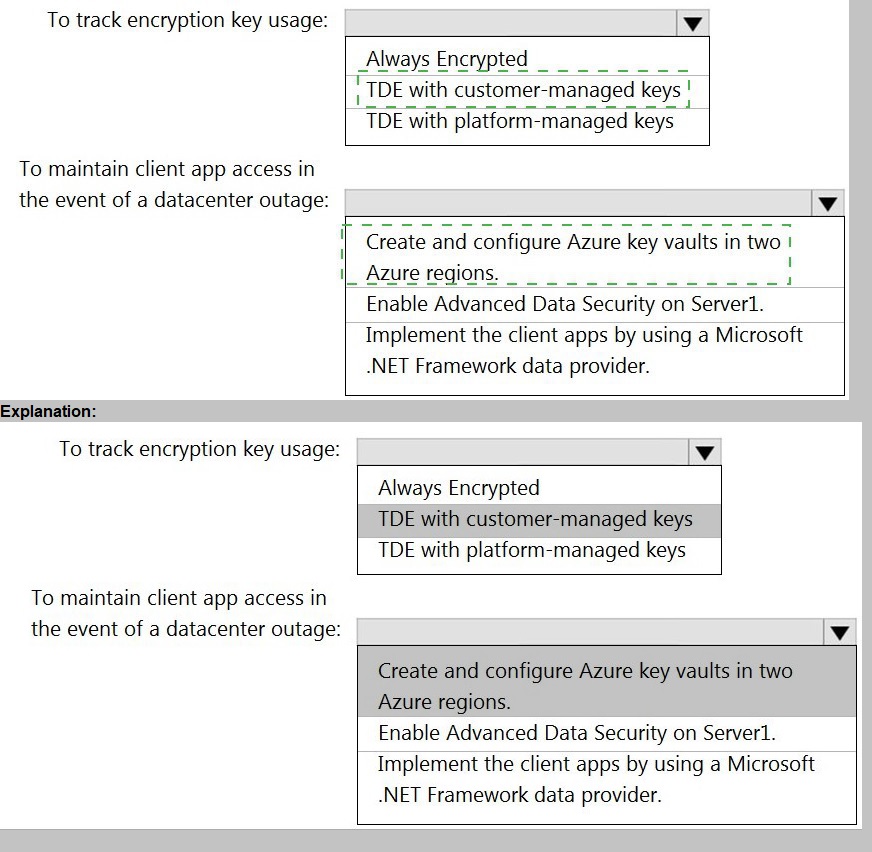

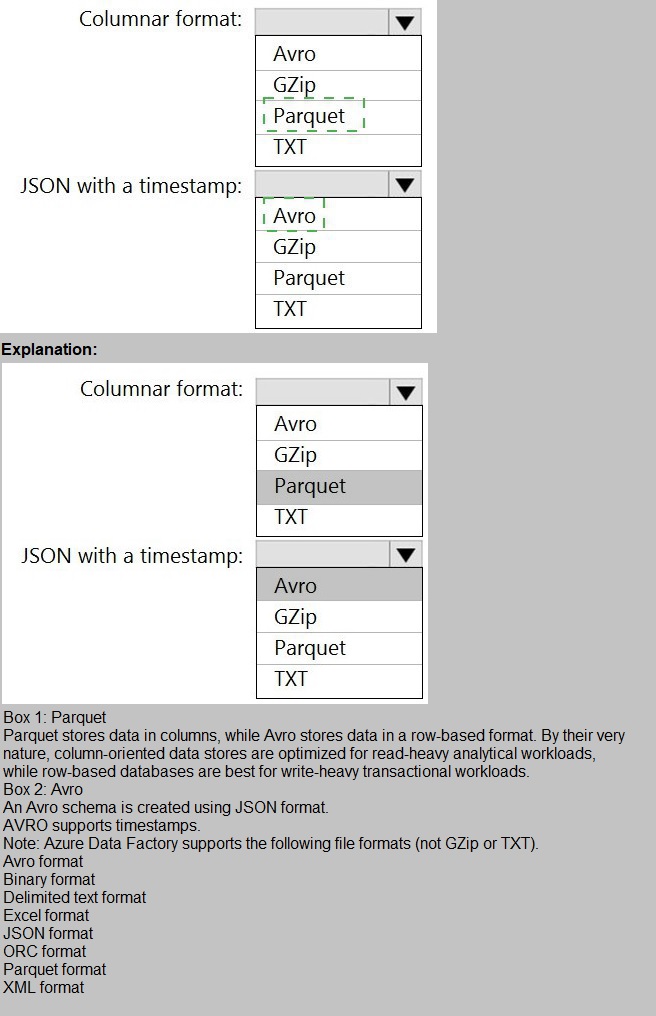

You need to output files from Azure Data Factory.

Which file format should you use for each type of output? To answer, select the appropriate

options in the answer area.

NOTE: Each correct selection is worth one point.

You are designing an enterprise data warehouse in Azure Synapse Analytics that will

contain a table named Customers. Customers will contain credit card information.

You need to recommend a solution to provide salespeople with the ability to view all the

entries in Customers.

The solution must prevent all the salespeople from viewing or inferring the credit card

information.

What should you include in the recommendation?

A.

data masking

B.

Always Encrypted

C.

column-level security

D.

row-level security

data masking

Explanation:

SQL Database dynamic data masking limits sensitive data exposure by masking it to non-privileged users.

The Credit card masking method exposes the last four digits of the designated fields and

adds a constant string as a prefix in the form of a credit card.

Example: XXXX-XXXX-XXXX-1234
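To make the masked format concrete, here is a minimal Python sketch that reproduces only the output shape shown in the example. In practice the database engine applies the mask; `mask_credit_card` is a hypothetical helper, and the fallback for inputs with fewer than four digits is an assumption.

```python
def mask_credit_card(number: str) -> str:
    """Keep the last four digits and replace the rest with a constant prefix."""
    digits = [c for c in number if c.isdigit()]
    if len(digits) < 4:
        # Assumed fallback: nothing safe to expose, so mask everything.
        return "XXXX-XXXX-XXXX-XXXX"
    return "XXXX-XXXX-XXXX-" + "".join(digits[-4:])
```

For example, `mask_credit_card("4111 1111 1111 1234")` yields `XXXX-XXXX-XXXX-1234`, matching the format above.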

Reference:

https://docs.microsoft.com/en-us/azure/sql-database/sql-database-dynamic-data-masking-get-started

You need to design an Azure Synapse Analytics dedicated SQL pool that meets the following requirements:

Can return an employee record from a given point in time.

Maintains the latest employee information.

Minimizes query complexity.

How should you model the employee data?

A.

as a temporal table

B.

as a SQL graph table

C.

as a degenerate dimension table

D.

as a Type 2 slowly changing dimension (SCD) table

as a Type 2 slowly changing dimension (SCD) table

Explanation:

A Type 2 SCD supports versioning of dimension members. Often the source system

doesn't store versions, so the data warehouse load process detects and manages changes

in a dimension table. In this case, the dimension table must use a surrogate key to provide

a unique reference to a version of the dimension member. It also includes columns that

define the date range validity of the version (for example, StartDate and EndDate) and

possibly a flag column (for example, IsCurrent) to easily filter by current dimension

members.
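The versioning scheme described above can be sketched in Python as an in-memory model. This is an illustration of the idea rather than a warehouse load process: the class `EmployeeVersion`, the helpers `apply_change` and `as_of`, and the half-open validity range (start inclusive, end exclusive) are all assumptions made for the example.

```python
from dataclasses import dataclass
from datetime import date
from typing import List, Optional


@dataclass
class EmployeeVersion:
    employee_sk: int           # surrogate key, unique per version
    employee_id: str           # business key from the source system
    title: str
    start_date: date           # first day the version is valid
    end_date: Optional[date]   # None while the version is current
    is_current: bool


def apply_change(rows: List[EmployeeVersion], employee_id: str,
                 title: str, effective: date) -> None:
    """Close the current version (if any) and append a new current one."""
    for row in rows:
        if row.employee_id == employee_id and row.is_current:
            row.end_date = effective
            row.is_current = False
    next_sk = max((r.employee_sk for r in rows), default=0) + 1
    rows.append(EmployeeVersion(next_sk, employee_id, title, effective, None, True))


def as_of(rows: List[EmployeeVersion], employee_id: str,
          when: date) -> Optional[EmployeeVersion]:
    """Return the version valid on the given date, or None if there is none."""
    for row in rows:
        if (row.employee_id == employee_id and row.start_date <= when
                and (row.end_date is None or when < row.end_date)):
            return row
    return None
```

A point-in-time lookup becomes a single range filter, and the latest record is the one row where `is_current` is true, which is why this model keeps query complexity low.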

Reference:

https://docs.microsoft.com/en-us/learn/modules/populate-slowly-changing-dimensions-azure-synapse-analytics-pipelines/3-choose-between-dimension-types

You have an Azure Synapse Analytics serverless SQL pool named Pool1 and an Azure

Data Lake Storage Gen2 account named storage1. The AllowBlobPublicAccess property

is disabled for storage1.

You need to create an external data source that can be used by Azure Active Directory

(Azure AD) users to access storage1 from Pool1.

What should you create first?

A.

an external resource pool

B.

a remote service binding

C.

database scoped credentials

D.

an external library

database scoped credentials

Note: This question is part of a series of questions that present the same scenario.

Each question in the series contains a unique solution that might meet the stated

goals. Some question sets might have more than one correct solution, while others

might not have a correct solution.

After you answer a question in this section, you will NOT be able to return to it. As a

result, these questions will not appear in the review screen.

You plan to create an Azure Databricks workspace that has a tiered structure. The

workspace will contain the following three workloads:

A workload for data engineers who will use Python and SQL.

A workload for jobs that will run notebooks that use Python, Scala, and SQL.

A workload that data scientists will use to perform ad hoc analysis in Scala and R.

The enterprise architecture team at your company identifies the following standards for

Databricks environments:

The data engineers must share a cluster.

The job cluster will be managed by using a request process whereby data

scientists and data engineers provide packaged notebooks for deployment to the

cluster.

All the data scientists must be assigned their own cluster that terminates

automatically after 120 minutes of inactivity. Currently, there are three data

scientists.

You need to create the Databricks clusters for the workloads.

Solution: You create a High Concurrency cluster for each data scientist, a High

Concurrency cluster for the data engineers, and a Standard cluster for the jobs.

Does this meet the goal?

A.

Yes

B.

No

No

Explanation:

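The data scientists need Standard clusters, not High Concurrency clusters: each data scientist works alone, and High Concurrency clusters do not support Scala.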

Standard clusters are recommended for a single user. Standard can run workloads

developed in any language:

Python, R, Scala, and SQL.

A High Concurrency cluster is a managed cloud resource. The key benefits of High Concurrency clusters are that they provide Apache Spark-native fine-grained sharing for maximum resource utilization and minimum query latencies.

Reference:

https://docs.azuredatabricks.net/clusters/configure.html

You plan to implement an Azure Data Lake Storage Gen2 container that will contain CSV

files. The size of the files will vary based on the number of events that occur per hour.

File sizes range from 4 KB to 5 GB.

You need to ensure that the files stored in the container are optimized for batch processing.

What should you do?

A.

Compress the files.

B.

Merge the files.

C.

Convert the files to JSON.

D.

Convert the files to Avro.

Convert the files to Avro.

Note: This question is part of a series of questions that present the same scenario. Each question in the series contains a unique solution that might meet the stated goals. Some

question sets might have more than one correct solution, while others might not have a

correct solution.

After you answer a question in this scenario, you will NOT be able to return to it. As a

result, these questions will not appear in the review screen.

You have an Azure Storage account that contains 100 GB of files. The files contain text

and numerical values. 75% of the rows contain description data that has an average length

of 1.1 MB.

You plan to copy the data from the storage account to an Azure SQL data warehouse.

You need to prepare the files to ensure that the data copies quickly.

Solution: You modify the files to ensure that each row is more than 1 MB.

Does this meet the goal?

A.

Yes

B.

No

No

Explanation:

Instead, modify the files to ensure that each row is less than 1 MB, because PolyBase cannot load rows larger than that.
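A quick pre-load check for the row-size rule above might look like the following Python sketch. It assumes the threshold is 1,000,000 bytes measured on the UTF-8 encoded row; `oversized_rows` and `LIMIT_BYTES` are hypothetical names, not part of any Azure tool.

```python
LIMIT_BYTES = 1_000_000  # assumed byte threshold for "1 MB"


def oversized_rows(lines, limit: int = LIMIT_BYTES, encoding: str = "utf-8"):
    """Return 1-based numbers of rows whose encoded size is not below limit."""
    return [
        number
        for number, line in enumerate(lines, start=1)
        if len(line.encode(encoding)) >= limit
    ]
```

Rows reported by such a check would need to be shortened or split before the copy, for example by moving the long description text to a separate file.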

References:

https://docs.microsoft.com/en-us/azure/sql-data-warehouse/guidance-for-loading-data

You plan to implement an Azure Data Lake Gen2 storage account.

You need to ensure that the data lake will remain available if a data center fails in the primary Azure region.

The solution must minimize costs.

Which type of replication should you use for the storage account?

A.

geo-redundant storage (GRS)

B.

zone-redundant storage (ZRS)

C.

locally-redundant storage (LRS)

D.

geo-zone-redundant storage (GZRS)

geo-redundant storage (GRS)

Explanation:

Geo-redundant storage (GRS) copies your data synchronously three times within a single

physical location in the primary region using LRS. It then copies your data asynchronously

to a single physical location in the secondary region.

Reference:

https://docs.microsoft.com/en-us/azure/storage/common/storage-redundancy
