A company is running a custom-built application that processes records. All the

components run on Amazon EC2 instances that run in an Auto Scaling group. Each record's processing is a multistep sequential action that is compute-intensive. Each step is

always completed in 5 minutes or less.

A limitation of the current system is that if any steps fail, the application has to reprocess

the record from the beginning The company wants to update the architecture so that the

application must reprocess only the failed steps.

What is the MOST operationally efficient solution that meets these requirements?

A. Create a web application to write records to Amazon S3 Use S3 Event Notifications to publish to an Amazon Simple Notification Service (Amazon SNS) topic Use an EC2 instance to poll Amazon SNS and start processing Save intermediate results to Amazon S3 to pass on to the next step

B. Perform the processing steps by using logic in the application. Convert the application code to run in a container. Use AWS Fargate to manage the container Instances. Configure the container to invoke itself to pass the state from one step to the next.

C. Create a web application to pass records to an Amazon Kinesis data stream. Decouple the processing by using the Kinesis data stream and AWS Lambda functions.

D. Create a web application to pass records to AWS Step Functions. Decouple the processing into Step Functions tasks and AWS Lambda functions.

Explanation:

Use AWS Step Functions to Orchestrate Processing:

AWS Step Functions allow you to build distributed applications by combining AWS

Lambda functions or other AWS services into workflows.

Decoupling the processing into Step Functions tasks enables you to retry

individual steps without reprocessing the entire record.

Architectural Steps:

Create a web applicationto pass records to AWS Step Functions:

Define a Step Functions state machine:

Use AWS Lambda functions:

Operational Efficiency:

Using Step Functions and Lambda improves operational efficiency by providing

built-in error handling, retries, and state management.

This architecture scales automatically and isolates failures to individual steps,

ensuring only failed steps are retried.

A company wants to use AWS CloudFormation for infrastructure deployment. The

company has strict tagging and resource requirements and wants to limit the deployment to

two Regions. Developers will need to deploy multiple versions of the same application.

Which solution ensures resources are deployed in accordance with company policy?

A. Create AWS Trusted Advisor checks to find and remediate unapproved CloudFormation StackSets.

B. Create a Cloud Formation drift detection operation to find and remediate unapproved CloudFormation StackSets.

C. Create CloudFormation StackSets with approved CloudFormation templates.

D. Create AWS Service Catalog products with approved CloudFormation templates.

A company wants to set up a continuous delivery pipeline. The company stores application

code in a private GitHub repository. The company needs to deploy the application

components to Amazon Elastic Container Service (Amazon ECS). Amazon EC2, and AWS

Lambda. The pipeline must support manual approval actions.

Which solution will meet these requirements?

A. Use AWS CodePipeline with Amazon ECS. Amazon EC2, and Lambda as deploy providers.

B. Use AWS CodePipeline with AWS CodeDeploy as the deploy provider.

C. Use AWS CodePipeline with AWS Elastic Beanstalk as the deploy provider.

D. Use AWS CodeDeploy with GitHub integration to deploy the application.

A company is running an application on Amazon EC2 instances in an Auto Scaling group.

Recently an issue occurred that prevented EC2 instances from launching successfully and

it took several hours for the support team to discover the issue. The support team wants to

be notified by email whenever an EC2 instance does not start successfully.

Which action will accomplish this?

A. Add a health check to the Auto Scaling group to invoke an AWS Lambda function whenever an instance status is impaired.

B. Configure the Auto Scaling group to send a notification to an Amazon SNS topic whenever a failed instance launch occurs.

C. Create an Amazon CloudWatch alarm that invokes an AWS Lambda function when a failed Attachinstances Auto Scaling API call is made.

D. Create a status check alarm on Amazon EC2 to send a notification to an Amazon SNS topic whenever a status check fail occurs.

A DevOps engineer is designing an application that integrates with a legacy REST API.

The application has an AWS Lambda function that reads records from an Amazon Kinesis

data stream. The Lambda function sends the records to the legacy REST API.

Approximately 10% of the records that the Lambda function sends from the Kinesis data

stream have data errors and must be processed manually. The Lambda function event

source configuration has an Amazon Simple Queue Service (Amazon SQS) dead-letter

queue as an on-failure destination. The DevOps engineer has configured the Lambda

function to process records in batches and has implemented retries in case of failure.

During testing the DevOps engineer notices that the dead-letter queue contains many

records that have no data errors and that already have been processed by the legacy

REST API. The DevOps engineer needs to configure the Lambda function's event source

options to reduce the number of errorless records that are sent to the dead-letter queue.

Which solution will meet these requirements?

A. Increase the retry attempts

B. Configure the setting to split the batch when an error occurs

C. Increase the concurrent batches per shard

D. Decrease the maximum age of record

Explanation: This solution will meet the requirements because it will reduce the number of errorless records that are sent to the dead-letter queue. When you configure the setting to split the batch when an error occurs, Lambda will retry only the records that caused the error, instead of retrying the entire batch. This way, the records that have no data errors and have already been processed by the legacy REST API will not be retried and sent to the dead-letter queue unnecessarily.

A DevOps engineer has created an AWS CloudFormation template that deploys an

application on Amazon EC2 instances The EC2 instances run Amazon Linux The

application is deployed to the EC2 instances by using shell scripts that contain user data.

The EC2 instances have an 1AM instance profile that has an 1AM role with the

AmazonSSMManagedlnstanceCore managed policy attached

The DevOps engineer has modified the user data in the CloudFormation template to install

a new version of the application. The engineer has also applied the stack update. However,

the application was not updated on the running EC2 instances. The engineer needs to

ensure that the changes to the application are installed on the running EC2 instances.

Which combination of steps will meet these requirements? (Select TWO.)

A. Configure the user data content to use the Multipurpose Internet Mail Extensions (MIME) multipart format. Set the scripts-user parameter to always in the text/cloud-config section.

B. Refactor the user data commands to use the cfn-init helper script. Update the user data to install and configure the cfn-hup and cfn-mit helper scripts to monitor and apply the metadata changes

C. Configure an EC2 launch template for the EC2 instances. Create a new EC2 Auto Scaling group. Associate the Auto Scaling group with the EC2 launch template Use the AutoScalingScheduledAction update policy for the Auto Scaling group.

D. Refactor the user data commands to use an AWS Systems Manager document (SSM document). Add an AWS CLI command in the user data to use Systems Manager Run Command to apply the SSM document to the EC2 instances

E. Refactor the user data command to use an AWS Systems Manager document (SSM document) Use Systems Manager State Manager to create an association between the SSM document and the EC2 instances.

A company has a mobile application that makes HTTP API calls to an Application Load

Balancer (ALB). The ALB routes requests to an AWS Lambda function. Many different

versions of the application are in use at any given time, including versions that are in

testing by a subset of users. The version of the application is defined in the user-agent

header that is sent with all requests to the API.

After a series of recent changes to the API, the company has observed issues with the

application. The company needs to gather a metric for each API operation by response

code for each version of the application that is in use. A DevOps engineer has modified the

Lambda function to extract the API operation name, version information from the useragent

header and response code.

Which additional set of actions should the DevOps engineer take to gather the required

metrics?

A. Modify the Lambda function to write the API operation name, response code, and version number as a log line to an Amazon CloudWatch Logs log group. Configure a CloudWatch Logs metric filter that increments a metric for each API operation name. Specify response code and application version as dimensions for the metric.

B. Modify the Lambda function to write the API operation name, response code, and version number as a log line to an Amazon CloudWatch Logs log group. Configure a CloudWatch Logs Insights query to populate CloudWatch metrics from the log lines. Specify response code and application version as dimensions for the metric.

C. Configure the ALB access logs to write to an Amazon CloudWatch Logs log group. Modify the Lambda function to respond to the ALB with the API operation name, response code, and version number as response metadata. Configure a CloudWatch Logs metric filter that increments a metric for each API operation name. Specify response code and application version as dimensions for the metric.

D. Configure AWS X-Ray integration on the Lambda function. Modify the Lambda function to create an X-Ray subsegment with the API operation name, response code, and version number. Configure X-Ray insights to extract an aggregated metric for each API operation name and to publish the metric to Amazon CloudWatch. Specify response code and application version as dimensions for the metric.

Explanation: "Note that the metric filter is different from a log insights query, where the

experience is interactive and provides immediate search results for the user to investigate.

No automatic action can be invoked from an insights query. Metric filters, on the other

hand, will generate metric data in the form of a time series. This lets you create alarms that

integrate into your ITSM processes, execute AWS Lambda functions, or even create

anomaly detection models.

A company has multiple development groups working in a single shared AWS account. The

Senior Manager of the groups wants to be alerted via a third-party API call when the

creation of resources approaches the service limits for the account.

Which solution will accomplish this with the LEAST amount of development effort?

A. Create an Amazon CloudWatch Event rule that runs periodically and targets an AWS

Lambda function. Within the Lambda function, evaluate the current state of the AWS

environment and compare deployed resource values to resource limits on the account.

Notify the Senior Manager if the account is approaching a service limit.

B. Deploy an AWS Lambda function that refreshes AWS Trusted Advisor checks, and

configure an Amazon CloudWatch Events rule to run the Lambda function periodically.

Create another CloudWatch Events rule with an event pattern matching Trusted Advisor

events and a target Lambda function. In the target Lambda function, notify the Senior

Manager.

C. Deploy an AWS Lambda function that refreshes AWS Personal Health Dashboard checks, and configure an Amazon CloudWatch Events rule to run the Lambda function periodically. Create another CloudWatch Events rule with an event pattern matching Personal Health Dashboard events and a target Lambda function. In the target Lambda function, notify the Senior Manager.

D. Add an AWS Config custom rule that runs periodically, checks the AWS service limit status, and streams notifications to an Amazon SNS topic. Deploy an AWS Lambda function that notifies the Senior Manager, and subscribe the Lambda function to the SNS topic.

Explanation: To meet the requirements, the company needs to create a solution that alerts the Senior Manager when the creation of resources approaches the service limits for the account with the least amount of development effort. The company can use AWS Trusted Advisor, which is a service that provides best practice recommendations for cost optimization, performance, security, and service limits. The company can deploy an AWS Lambda function that refreshes Trusted Advisor checks, and configure an Amazon CloudWatch Events rule to run the Lambda function periodically. This will ensure that Trusted Advisor checks are up to date and reflect the current state of the account. The company can then create another CloudWatch Events rule with an event pattern matching Trusted Advisor events and a target Lambda function. The event pattern can filter for events related to service limit checks and their status. The target Lambda function can notify the Senior Manager via a third-party API call if the event indicates that the account is approaching or exceeding a service limit.

A company is using an AWS CodeBuild project to build and package an application. The

packages are copied to a shared Amazon S3 bucket before being deployed across multiple

AWS accounts.

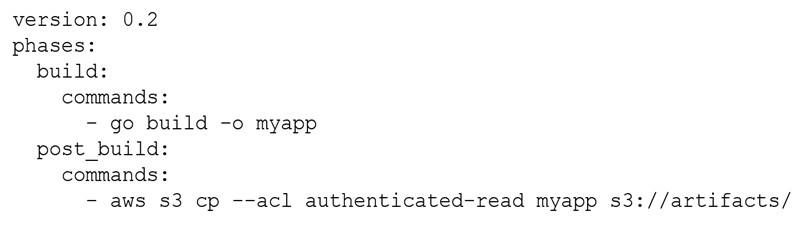

The buildspec.yml file contains the following:

The DevOps engineer has noticed that anybody with an AWS account is able to download

the artifacts.

What steps should the DevOps engineer take to stop this?

A. Modify the post_build command to use --acl public-read and configure a bucket policy that grants read access to the relevant AWS accounts only.

B. Configure a default ACL for the S3 bucket that defines the set of authenticated users as the relevant AWS accounts only and grants read-only access.

C. Create an S3 bucket policy that grants read access to the relevant AWS accounts and denies read access to the principal “*”.

D. Modify the post_build command to remove --acl authenticated-read and configure a bucket policy that allows read access to the relevant AWS accounts only.

Explanation: When setting the flag authenticated-read in the command line, the owner gets FULL_CONTROL. The AuthenticatedUsers group (Anyone with an AWS account) gets READ access.

A company manages a web application that runs on Amazon EC2 instances behind an

Application Load Balancer (ALB). The EC2 instances run in an Auto Scaling group across

multiple Availability Zones. The application uses an Amazon RDS for MySQL DB instance

to store the data. The company has configured Amazon Route 53 with an alias record that

points to the ALB.

A new company guideline requires a geographically isolated disaster recovery (DR> site

with an RTO of 4 hours and an RPO of 15 minutes.

Which DR strategy will meet these requirements with the LEAST change to the application

stack?

A. Launch a replica environment of everything except Amazon RDS in a different Availability Zone Create an RDS read replica in the new Availability Zone:and configure the new stack to point to the local RDS DB instance. Add the new stack to the Route 53 record set by using a hearth check to configure a failover routing policy.

B. Launch a replica environment of everything except Amazon RDS in a different AWS. Region Create an RDS read replica in the new Region and configure the new stack to point to the local RDS DB instance. Add the new stack to the Route 53 record set by using a health check to configure a latency routing policy.

C. Launch a replica environment of everything except Amazon RDS ma different AWS Region. In the event of an outage copy and restore the latest RDS snapshot from the primary. Region to the DR Region Adjust the Route 53 record set to point to the ALB in the DR Region.

D. Launch a replica environment of everything except Amazon RDS in a different AWS Region. Create an RDS read replica in the new Region and configure the new environment to point to the local RDS DB instance. Add the new stack to the Route 53 record set by using a health check to configure a failover routing policy. In the event of an outage promote the read replica to primary.

A company has developed an AWS Lambda function that handles orders received through

an API. The company is using AWS CodeDeploy to deploy the Lambda function as the final

stage of a CI/CD pipeline.

A DevOps engineer has noticed there are intermittent failures of the ordering API for a few

seconds after deployment. After some investigation the DevOps engineer believes the

failures are due to database changes not having fully propagated before the Lambda

function is invoked

How should the DevOps engineer overcome this?

A. Add a BeforeAllowTraffic hook to the AppSpec file that tests and waits for any necessary database changes before traffic can flow to the new version of the Lambda function.

B. Add an AfterAlIowTraffic hook to the AppSpec file that forces traffic to wait for any pending database changes before allowing the new version of the Lambda function to respond.

C. Add a BeforeAllowTraffic hook to the AppSpec file that tests and waits for any necessary database changes before deploying the new version of the Lambda function.

D. Add a validateServicehook to the AppSpec file that inspects incoming traffic and rejects the payload if dependent services such as the database are not yet ready.

A DevOps engineer is working on a data archival project that requires the migration of onpremises

data to an Amazon S3 bucket. The DevOps engineer develops a script that

incrementally archives on-premises data that is older than 1 month to Amazon S3. Data

that is transferred to Amazon S3 is deleted from the on-premises location The script uses

the S3 PutObject operation.

During a code review the DevOps engineer notices that the script does not verity whether

the data was successfully copied to Amazon S3. The DevOps engineer must update the

script to ensure that data is not corrupted during transmission. The script must use MD5

checksums to verify data integrity before the on-premises data is deleted.

Which solutions for the script will meet these requirements'? (Select TWO.)

A. Check the returned response for the Versioned Compare the returned Versioned against the MD5 checksum.

B. Include the MD5 checksum within the Content-MD5 parameter. Check the operation call's return status to find out if an error was returned.

C. Include the checksum digest within the tagging parameter as a URL query parameter.

D. Check the returned response for the ETag. Compare the returned ETag against the MD5 checksum.

E. Include the checksum digest within the Metadata parameter as a name-value pair After upload use the S3 HeadObject operation to retrieve metadata from the object.

| Page 9 out of 27 Pages |

| Previous |