Topic 3: Misc. Questions

You develop a custom question answering project in Azure Cognitive Service for Language. The project will be used by a chatbot. You need to configure the project to engage in multi-turn conversations. What should you do?

A.

Add follow-up prompts

B.

Enable active learning.

C.

Add alternate questions

D.

Enable chit-chat

Add follow-up prompts
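To make the follow-up prompts option concrete, here is a minimal Python sketch of how one question-answer pair can link to child pairs through prompts. The field names (`dialog`, `isContextOnly`, `prompts`, `displayOrder`, `displayText`, `qnaId`) are assumed to mirror the shape of a custom question answering export; the sample content and the `follow_ups` and `next_turn` helpers are hypothetical.

```python
# Hypothetical knowledge base: each entry may list follow-up prompts that
# point at other entries by id, which is what chains turns together.
qna_pairs = {
    1: {
        "questions": ["How do I reset my password?"],
        "answer": "Which account type do you use?",
        "dialog": {
            "isContextOnly": False,
            "prompts": [
                {"displayOrder": 2, "displayText": "Personal account", "qnaId": 3},
                {"displayOrder": 1, "displayText": "Work account", "qnaId": 2},
            ],
        },
    },
    2: {
        "questions": ["Work account"],
        "answer": "Contact your IT administrator.",
        "dialog": {"isContextOnly": True, "prompts": []},
    },
    3: {
        "questions": ["Personal account"],
        "answer": "Use the self-service reset page.",
        "dialog": {"isContextOnly": True, "prompts": []},
    },
}


def follow_ups(qna_id):
    """Return the display text of each follow-up prompt, in display order."""
    prompts = qna_pairs[qna_id]["dialog"]["prompts"]
    ordered = sorted(prompts, key=lambda p: p["displayOrder"])
    return [p["displayText"] for p in ordered]


def next_turn(qna_id, chosen_text):
    """Resolve the user's prompt choice to the answer of the linked pair."""
    for prompt in qna_pairs[qna_id]["dialog"]["prompts"]:
        if prompt["displayText"] == chosen_text:
            return qna_pairs[prompt["qnaId"]]["answer"]
    return None  # the choice does not match any prompt on this pair
```

A chatbot would show the result of `follow_ups(1)` as buttons after the first answer and call something like `next_turn(1, "Work account")` once the user picks one.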

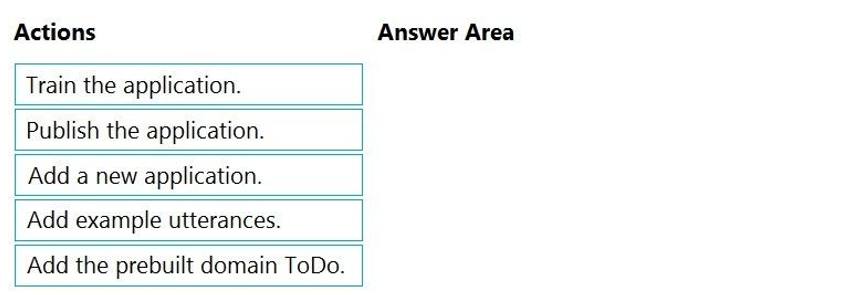

You plan to build a chatbot to support task tracking.

You create a Language Understanding service named lu1.

You need to build a Language Understanding model to integrate into the chatbot. The solution must minimize development time to build the model.

Which four actions should you perform in sequence? To answer, move the appropriate actions from the list of actions to the answer area and arrange them in the correct order. (Choose four.)

Explanation:

1. Add a new application

2. Add the prebuilt domain intent ToDo (it already includes utterances, so the separate step of adding example utterances can be skipped)

3. Train

4. Publish

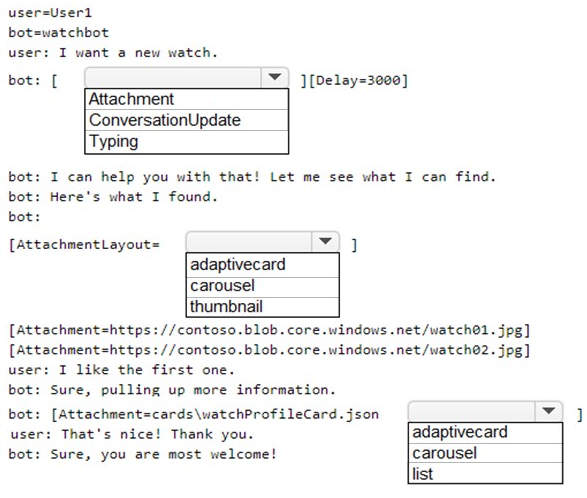

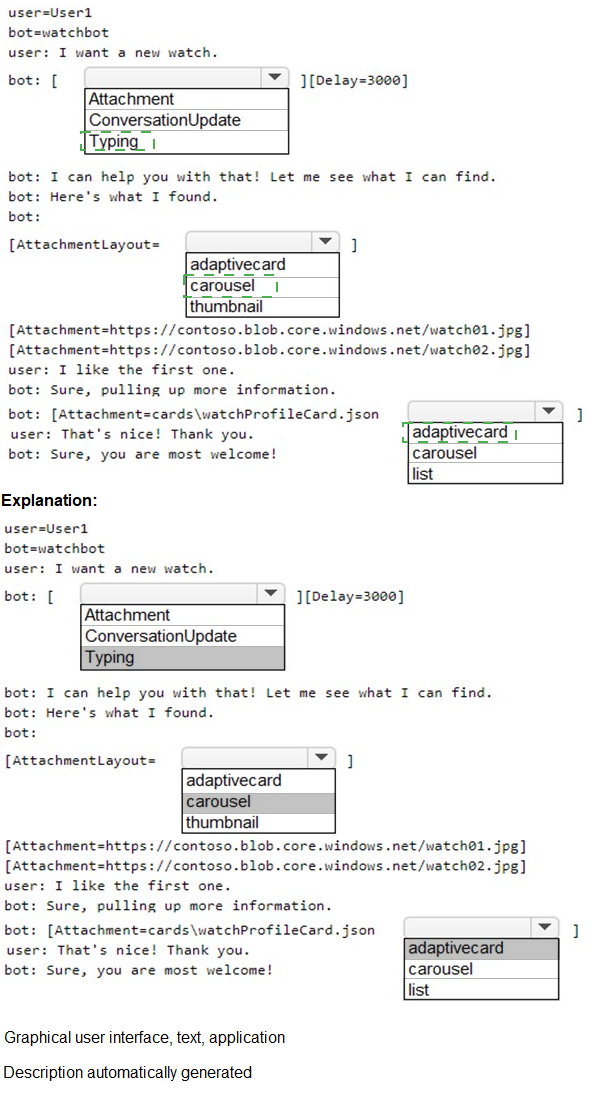

You are designing a conversation flow to be used in a chatbot.

You need to test the conversation flow by using the Microsoft Bot Framework Emulator.

How should you complete the .chat file? To answer, select the appropriate options in the answer area.

NOTE: Each correct selection is worth one point.

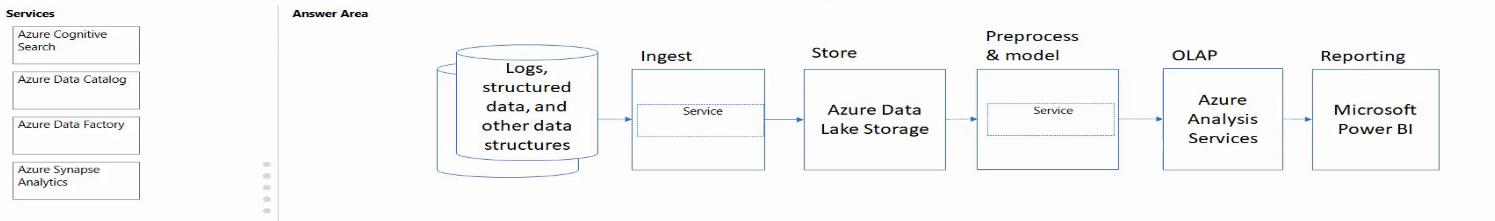

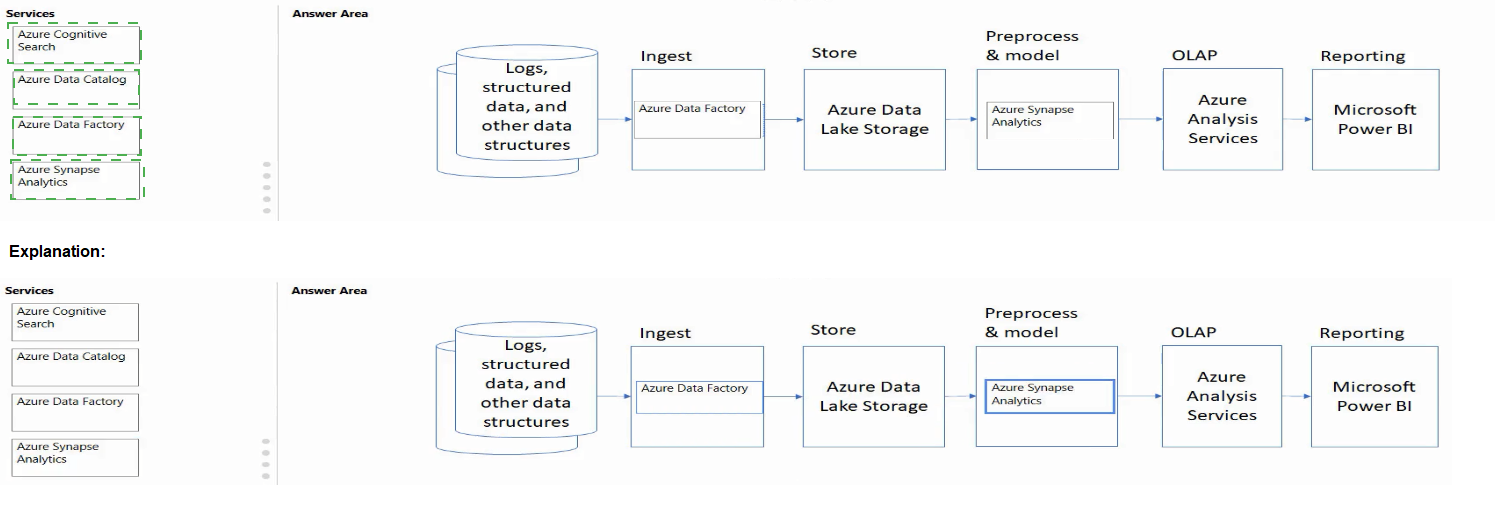

Match the Azure services to the appropriate locations in the architecture.

To answer, drag the appropriate service from the column on the left to its location on the right. Each service may be used once, more than once, or not at all.

NOTE: Each correct match is worth one point.

What is used to define a query in a stream processing job in Azure Stream Analytics?

A.

SQL

B.

XML

C.

YAML

D.

KQL

SQL

You have a Language Understanding resource named lu1.

You build and deploy an Azure bot named bot1 that uses lu1.

You need to ensure that bot1 adheres to the Microsoft responsible AI principle of inclusiveness.

How should you extend bot1?

A.

Implement authentication for bot1

B.

Enable active learning for lu1

C.

Host lu1 in a container

D.

Add Direct Line Speech to bot1.

Add Direct Line Speech to bot1.

Explanation:

Inclusiveness: AI systems should empower everyone and engage people.

Direct Line Speech is a robust, end-to-end solution for creating a flexible, extensible voice assistant. It is powered by the Bot Framework and its Direct Line Speech channel, which is optimized for voice-in, voice-out interaction with bots.

Reference:

https://docs.microsoft.com/en-us/azure/cognitive-services/speech-service/direct-line-speech

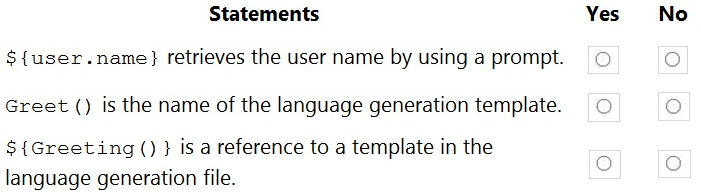

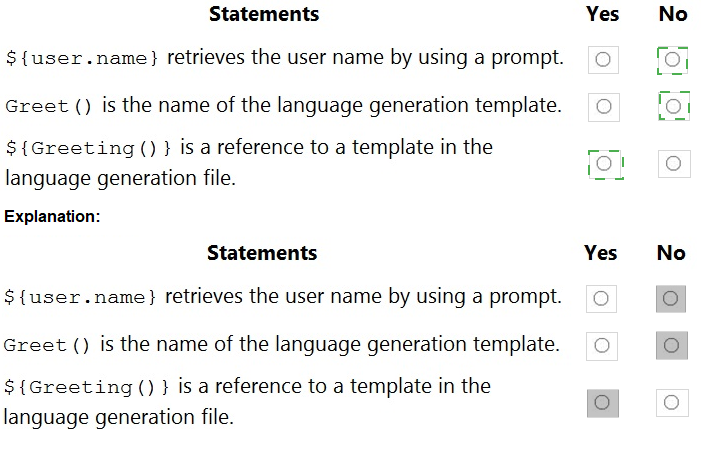

You are reviewing the design of a chatbot. The chatbot includes a language generation file that contains the following fragment.

# Greet(user)

- ${Greeting()}, ${user.name}

For each of the following statements, select Yes if the statement is true. Otherwise, select No.

NOTE: Each correct selection is worth one point.

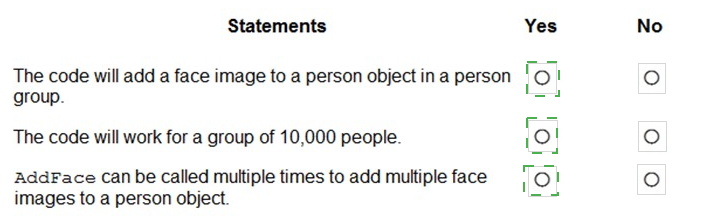

You are developing an application to recognize employees’ faces by using the Face Recognition API. Images of the faces will be accessible from a URI endpoint.

The application has the following code.

Explanation:

A. True

B. True

C. True

B: See this example code from the documentation, which uses a PersonGroup of size 10,000:

https://docs.microsoft.com/en-us/azure/cognitive-services/face/face-api-how-to-topics/howto-add-faces

The question tries to trick you into thinking that you need a LargePersonGroup for a size of 10,000, but the documentation for it does not state this limitation or criterion:

https://docs.microsoft.com/en-us/azure/cognitive-services/face/face-api-how-to-topics/howto-use-large-scale

You have the following data sources:

Finance: On-premises Microsoft SQL Server database

Sales: Azure Cosmos DB using the Core (SQL) API

Logs: Azure Table storage

HR: Azure SQL database

You need to ensure that you can search all the data by using the Azure Cognitive Search REST API. What should you do?

A.

Configure multiple read replicas for the data in Sales.

B.

Mirror Finance to an Azure SQL database

C.

Migrate the data in Sales to the MongoDB API.

D.

Ingest the data in Logs into Azure Sentinel.

Mirror Finance to an Azure SQL database

Explanation:

An on-premises Microsoft SQL Server database cannot be used as an indexer data source.

Note: Indexer in Azure Cognitive Search: Automate aspects of an indexing operation by configuring a data source and an indexer that you can schedule or run on demand. This feature is supported for a limited number of data source types on Azure.

Indexers crawl data stores on Azure.

Azure Blob Storage

Azure Data Lake Storage Gen2 (in preview)

Azure Table Storage

Azure Cosmos DB

Azure SQL Database

SQL Managed Instance

SQL Server on Azure Virtual Machines

Reference:

https://docs.microsoft.com/en-us/azure/search/search-indexer-overview#supported-datasources
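As a rough illustration of why Finance has to be mirrored first, the sketch below builds the JSON body of a data source definition for an indexer. It assumes the REST `type` identifiers `azureblob`, `adlsgen2`, `azuretable`, `cosmosdb`, and `azuresql` (with the SQL-family sources in the list above all using `azuresql`); the helper name, resource names, and connection string are hypothetical, and nothing is sent over the network.

```python
# Data source "type" values assumed to be accepted by Azure Cognitive Search
# indexers; verify against the current REST API documentation.
SUPPORTED_TYPES = {"azureblob", "adlsgen2", "azuretable", "cosmosdb", "azuresql"}


def build_data_source(name, ds_type, connection_string, container):
    """Return the body for a 'create data source' call, or raise if unsupported."""
    if ds_type not in SUPPORTED_TYPES:
        raise ValueError(f"'{ds_type}' is not a supported indexer data source type")
    return {
        "name": name,
        "type": ds_type,
        "credentials": {"connectionString": connection_string},
        "container": {"name": container},  # table, collection, or blob container
    }


# Finance becomes indexable once it is mirrored to an Azure SQL database.
finance = build_data_source(
    "finance-ds",
    "azuresql",
    "Server=tcp:example.database.windows.net;Database=finance;",  # placeholder
    "Invoices",
)
```

Passing a made-up type such as `"onprem-sqlserver"` raises `ValueError`, which is the sketch's stand-in for the missing on-premises connector.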

You need to build a chatbot that meets the following requirements:

Supports chit-chat, knowledge base, and multilingual models

Performs sentiment analysis on user messages

Selects the best language model automatically

What should you integrate into the chatbot?

A.

QnA Maker, Language Understanding, and Dispatch

B.

Translator, Speech, and Dispatch

C.

Language Understanding, Text Analytics, and QnA Maker

D.

Text Analytics, Translator, and Dispatch

Language Understanding, Text Analytics, and QnA Maker

Explanation:

Language Understanding: An AI service that allows users to interact with your applications, bots, and IoT devices by using natural language.

QnA Maker is a cloud-based Natural Language Processing (NLP) service that allows you to create a natural conversational layer over your data. It is used to find the most appropriate answer for any input from your custom knowledge base (KB) of information.

Text Analytics: Mine insights in unstructured text using natural language processing (NLP), with no machine learning expertise required. Gain a deeper understanding of customer opinions with sentiment analysis. The Language Detection feature of the Azure Text Analytics REST API evaluates text input.

Reference:

https://azure.microsoft.com/en-us/services/cognitive-services/text-analytics/

https://docs.microsoft.com/en-us/azure/cognitive-services/qnamaker/overview/overview
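For the sentiment analysis requirement, a minimal sketch of the request a bot could prepare for each batch of user messages is shown here. It assumes the Text Analytics v3.1 REST route `/text/analytics/v3.1/sentiment` and the `Ocp-Apim-Subscription-Key` header; the endpoint, key, and helper name are placeholders, and the request is only built, not sent.

```python
import json


def build_sentiment_request(endpoint, key, messages, language="en"):
    """Build (url, headers, body) for a Text Analytics v3.1 sentiment call."""
    url = endpoint.rstrip("/") + "/text/analytics/v3.1/sentiment"
    headers = {
        "Ocp-Apim-Subscription-Key": key,
        "Content-Type": "application/json",
    }
    # Each document needs a unique id so results can be matched to messages.
    documents = [
        {"id": str(i), "language": language, "text": text}
        for i, text in enumerate(messages, start=1)
    ]
    return url, headers, json.dumps({"documents": documents})


url, headers, body = build_sentiment_request(
    "https://example-resource.cognitiveservices.azure.com/",  # placeholder
    "<your-key>",  # placeholder
    ["The bot solved my problem quickly.", "I am still waiting for an answer."],
)
```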

You have a chatbot that was built by using the Microsoft Bot Framework. You need to debug the chatbot endpoint remotely.

Which two tools should you install on a local computer? Each correct answer presents part of the solution. (Choose two.)

NOTE: Each correct selection is worth one point.

A.

Fiddler

B.

Bot Framework Composer

C.

Bot Framework Emulator

D.

Bot Framework CLI

E.

ngrok

F.

nginx

Bot Framework Emulator

ngrok

Explanation:

Bot Framework Emulator is a desktop application that allows bot developers to test and debug bots, either locally or remotely.

ngrok is a cross-platform application that "allows you to expose a web server running on your local machine to the internet." Essentially, what we'll be doing is using ngrok to forward messages from external channels on the web directly to our local machine to allow debugging, as opposed to the standard messaging endpoint configured in the Azure portal.

Reference:

https://docs.microsoft.com/en-us/azure/bot-service/bot-service-debug-emulator

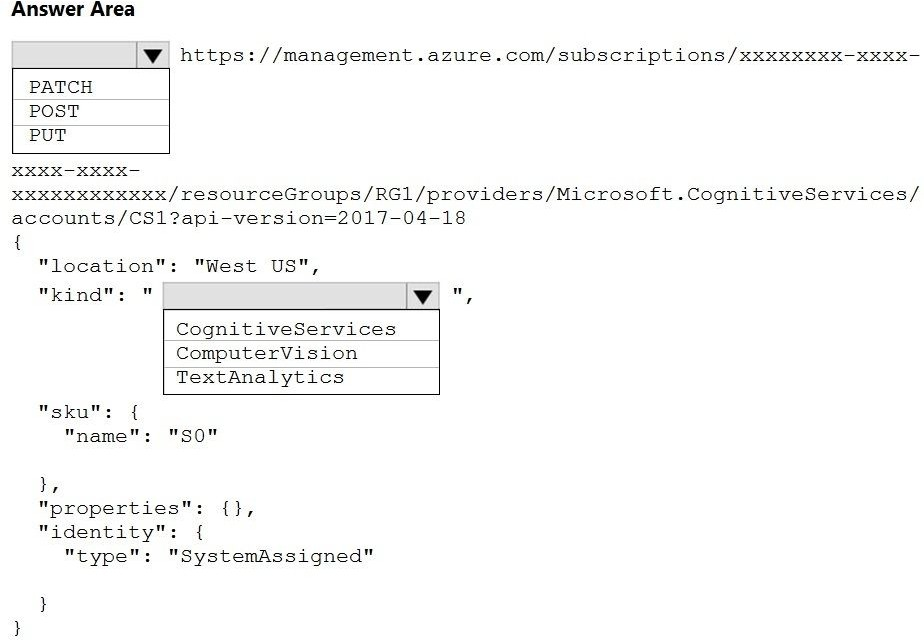

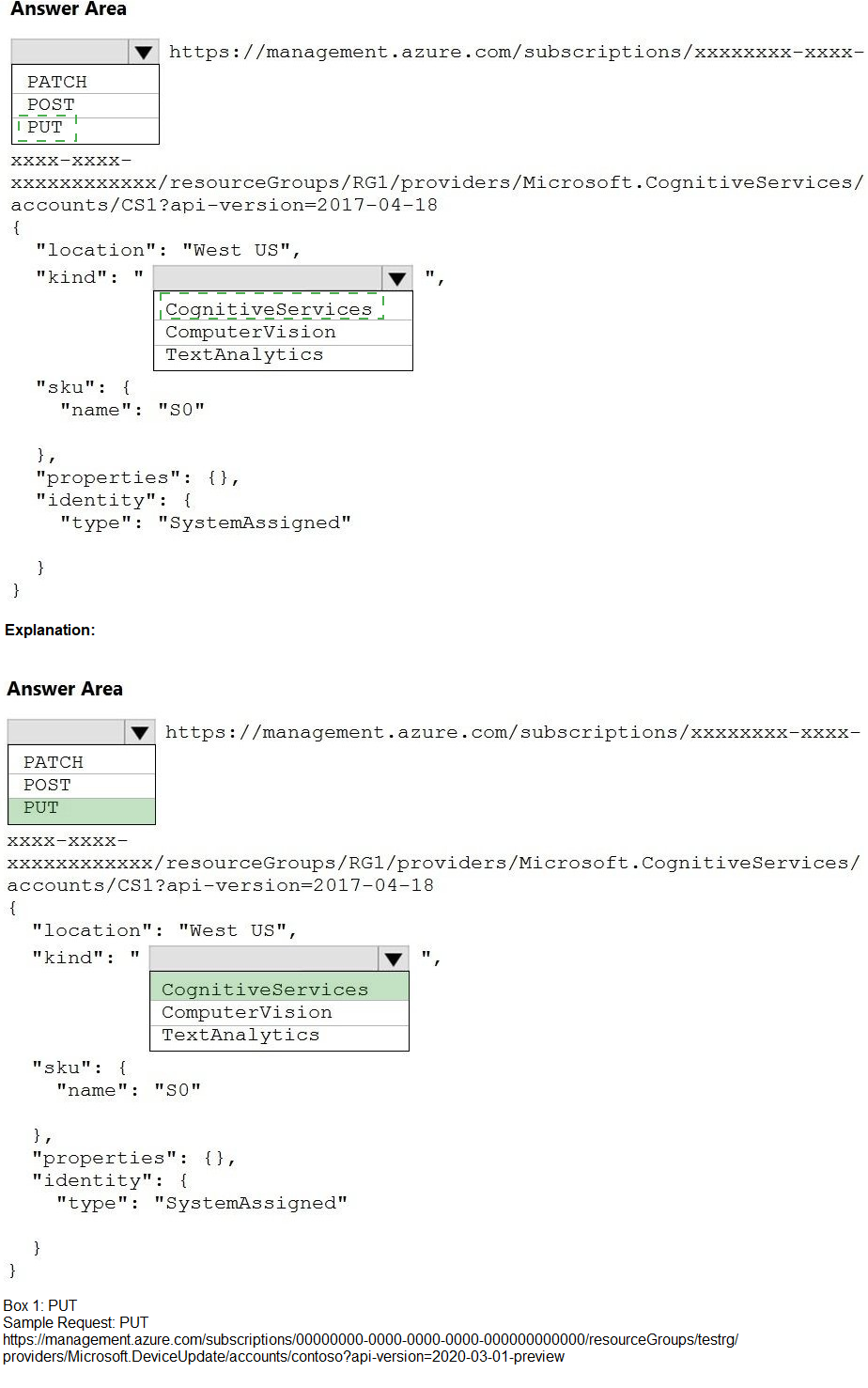

You need to create a new resource that will be used to perform sentiment analysis and optical character recognition (OCR). The solution must meet the following requirements:

Use a single key and endpoint to access multiple services.

Consolidate billing for future services that you might use.

Support the use of Computer Vision in the future.

How should you complete the HTTP request to create the new resource? To answer, select the appropriate options in the answer area.

NOTE: Each correct selection is worth one point.
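One way to picture a request that meets these requirements is the sketch below, which prepares an Azure Resource Manager call for a multi-service resource. It assumes that resources are created with `PUT`, that the multi-service kind is `CognitiveServices`, and that `2017-04-18` is an acceptable API version; the subscription ID, resource group, account name, SKU, and token are placeholders, and the request is built but never sent.

```python
import json
import urllib.request


def build_create_request(subscription_id, resource_group, account_name, location, token):
    """Prepare (but do not send) the ARM request that creates the resource."""
    url = (
        "https://management.azure.com"
        f"/subscriptions/{subscription_id}"
        f"/resourceGroups/{resource_group}"
        "/providers/Microsoft.CognitiveServices"
        f"/accounts/{account_name}"
        "?api-version=2017-04-18"
    )
    body = {
        "location": location,
        # The multi-service kind gives one key and endpoint for several services.
        "kind": "CognitiveServices",
        "sku": {"name": "S0"},
        "properties": {},
    }
    return urllib.request.Request(
        url,
        data=json.dumps(body).encode("utf-8"),
        headers={
            "Authorization": f"Bearer {token}",
            "Content-Type": "application/json",
        },
        method="PUT",
    )


request = build_create_request(
    "00000000-0000-0000-0000-000000000000", "rg1", "cs1", "West US", "<token>"
)
```

A single-service kind such as `TextAnalytics` would cover sentiment analysis only, which is why the sketch uses the multi-service kind for the key, billing, and Computer Vision requirements.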
