Topic 2: Contoso, Ltd. Case Study

This is a case study. Case studies are not timed separately. You can use as much exam

time as you would like to complete each case. However, there may be additional case

studies and sections on this exam. You must manage your time to ensure that you are able

to complete all questions included on this exam in the time provided.

To answer the questions included in a case study, you will need to reference information

that is provided in the case study. Case studies might contain exhibits and other resources

that provide more information about the scenario that is described in the case study. Each

question is independent of the other questions in this case study.

At the end of this case study, a review screen will appear. This screen allows you to review

your answers and to make changes before you move to the next section of the exam. After

you begin a new section, you cannot return to this section.

To start the case study

To display the first question in this case study, click the Next button. Use the buttons in the

left pane to explore the content of the case study before you answer the questions. Clicking

these buttons displays information such as business requirements, existing environment,

and problem statements. If the case study has an All Information tab, note that the

information displayed is identical to the information displayed on the subsequent tabs.

When you are ready to answer a question, click the Question button to return to the

question.

General Overview

Contoso, Ltd. is an international accounting company that has offices in France, Portugal,

and the United Kingdom. Contoso has a professional services department that contains the

roles shown in the following table.

• RBAC role assignments must use the principle of least privilege.

• RBAC roles must be assigned only to Azure Active Directory groups.

• AI solution responses must have a confidence score that is equal to or greater than 70

percent.

• When the response confidence score of an AI response is lower than 70 percent, the

response must be improved by human input.

Chatbot Requirements

Contoso identifies the following requirements for the chatbot:

• Provide customers with answers to the FAQs.

• Ensure that the customers can chat to a customer service agent.

• Ensure that the members of a group named Management-Accountants can approve the

FAQs.

• Ensure that the members of a group named Consultant-Accountants can create and

amend the FAQs.

• Ensure that the members of a group named Agent-CustomerServices can browse the

FAQs.

• Ensure that access to the customer service agents is managed by using Omnichannel for

Customer Service.

• When the response confidence score is low, ensure that the chatbot can provide other

response options to the customers.

Document Processing Requirements

Contoso identifies the following requirements for document processing:

• The document processing solution must be able to process standardized financial

documents that have the following characteristics:

• Contain fewer than 20 pages.

• Be formatted as PDF or JPEG files.

• Have a distinct standard for each office.

• The document processing solution must be able to extract tables and text from the

financial documents.

• The document processing solution must be able to extract information from receipt

images.

• Members of a group named Management-Bookkeeper must define how to extract tables

from the financial documents.

• Members of a group named Consultant-Bookkeeper must be able to process the financial

documents.

Knowledgebase Requirements

Contoso identifies the following requirements for the knowledgebase:

• Supports searches for equivalent terms

• Can transcribe jargon with high accuracy

• Can search content in different formats, including video

• Provides relevant links to external resources for further research

You are developing the chatbot.

You create the following components:

• A QnA Maker resource

• A chatbot by using the Azure Bot Framework SDK

You need to add an additional component to meet the technical requirements and the

chatbot requirements. What should you add?

A. Dispatch

B. chatdown

C. Language Understanding

D. Microsoft Translator

Answer: A. Dispatch

Explanation:

Scenario: All planned projects must support English, French, and Portuguese.

If a bot uses multiple LUIS models and QnA Maker knowledge bases,

you can use the Dispatch tool to determine which LUIS model or QnA Maker knowledge

base best matches the user input. The dispatch tool does this by creating a single LUIS

app to route user input to the correct model.
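
As a rough illustration of the routing idea described above, the sketch below sends an utterance to the handler whose dispatch intent scored highest. The intent names (`q_faq`, `l_support`), the two handler functions, and the shape of the `scores` dictionary are hypothetical; a real bot would obtain the scores from the LUIS app that the Dispatch tool generates.

```python
from typing import Callable, Dict

FALLBACK = "Sorry, I did not understand that."


def answer_from_faq(text: str) -> str:
    # Hypothetical stand-in for a call to a QnA Maker knowledge base.
    return f"FAQ answer for: {text}"


def handle_support_intent(text: str) -> str:
    # Hypothetical stand-in for a call to a LUIS model.
    return f"Support flow for: {text}"


# Assumed naming: one dispatch intent per downstream model.
HANDLERS: Dict[str, Callable[[str], str]] = {
    "q_faq": answer_from_faq,
    "l_support": handle_support_intent,
}


def route(text: str, scores: Dict[str, float]) -> str:
    """Send the utterance to the handler whose dispatch intent scored highest."""
    if not scores:
        return FALLBACK
    top_intent = max(scores, key=lambda name: scores[name])
    handler = HANDLERS.get(top_intent)
    if handler is None:
        # Covers a "None" intent or any intent without a registered handler.
        return FALLBACK
    return handler(text)
```

Keeping the mapping in one dictionary means a new knowledge base or model only needs one extra entry, which mirrors the single routing app that the explanation describes.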

Reference:

https://docs.microsoft.com/en-us/azure/bot-service/bot-builder-tutorial-dispatch

You are developing the chatbot.

You create the following components:

* A QnA Maker resource

* A chatbot by using the Azure Bot Framework SDK.

You need to integrate the components to meet the chatbot requirements.

Which property should you use?

A. QnADialogResponseOptions.CardNoMatchText

B. QnAMakerOptions.ScoreThreshold

C. QnAMakerOptions.StrictFilters

D. QnAMakerOptions.RankerType

Answer: D. QnAMakerOptions.RankerType

Explanation:

Scenario: When the response confidence score is low, ensure that the chatbot can provide

other response options to the customers.

When no good match is found by the ranker, the confidence score of 0.0 or "None" is

returned and the default response is "No good match found in the KB". You can override

this default response in the bot or application code calling the endpoint. Alternately, you

can also set the override response in Azure and this changes the default for all knowledge

bases deployed in a particular QnA Maker service.
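
Overriding the default response in calling code could look roughly like the sketch below. It assumes the endpoint returns a dictionary with an `answers` list whose items carry `answer` and `score` fields, and that scores are on a 0 to 100 scale; the fallback wording and the 70 threshold are illustrative choices taken from the scenario, not service defaults.

```python
from typing import Any, Dict

# Hypothetical fallback text offered when no confident answer exists.
FALLBACK_TEXT = "I could not find a confident answer. Would you like to chat to an agent?"


def pick_reply(response: Dict[str, Any], min_score: float = 70.0) -> str:
    """Return the best answer, or a fallback when its score is too low."""
    answers = response.get("answers", [])
    if not answers:
        return FALLBACK_TEXT
    best = max(answers, key=lambda item: item.get("score", 0.0))
    if best.get("score", 0.0) < min_score:
        # Replaces the service's default "no good match" text.
        return FALLBACK_TEXT
    return best["answer"]
```

For example, a response whose only answer has a score of 0.0 yields the fallback text, while an answer scored 88.5 is returned unchanged.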

Choosing Ranker type: By default, QnA Maker searches through questions and answers. If

you want to search through questions only, to generate an answer, use the

RankerType=QuestionOnly in the POST body of the GenerateAnswer request.
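
A minimal sketch of such a POST body follows. Only the JSON payload is built here; the endpoint URL, the authorization header, and the HTTP call itself are left out, and the `top` value and the sample question are illustrative assumptions.

```python
import json
from typing import Any, Dict


def build_generate_answer_body(question: str, questions_only: bool = True) -> Dict[str, Any]:
    """Build a GenerateAnswer request body, optionally ranking on questions only."""
    body: Dict[str, Any] = {"question": question, "top": 3}
    if questions_only:
        # Omitting this field leaves the default ranker, which searches
        # through both questions and answers.
        body["rankerType"] = "QuestionOnly"
    return body


payload = build_generate_answer_body("How do I submit an expense claim?")
print(json.dumps(payload, indent=2))
```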

Reference:

https://docs.microsoft.com/en-us/azure/cognitive-services/qnamaker/concepts/bestpractices

You are developing the knowledgebase by using Azure Cognitive Search.

You need to meet the knowledgebase requirements for searching equivalent terms.

What should you include in the solution?

A. synonym map

B. a suggester

C. a custom analyzer

D. a built-in key phrase extraction skill

Answer: A. synonym map

Explanation:

Within a search service, synonym maps are a global resource that associate equivalent

terms, expanding the scope of a query without the user having to actually provide the term.

For example, assuming "dog", "canine", and "puppy" are mapped synonyms, a query on

"canine" will match on a document containing "dog".

Create synonyms: A synonym map is an asset that can be created once and used by many

indexes.
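
The dog/canine/puppy example can be sketched as a synonym map definition plus a small local expansion helper. The map name `animal-synonyms` is hypothetical, the payload shape (a `solr` format with comma-separated equivalent terms) is an assumption about the service's definition format, and the `expand` helper only imitates the behaviour locally; the search service itself performs the expansion at query time.

```python
from typing import Dict, List, Set

# Assumed definition payload; the name is a placeholder.
synonym_map = {
    "name": "animal-synonyms",
    "format": "solr",
    "synonyms": "dog, canine, puppy",
}


def build_lookup(rules: str) -> Dict[str, Set[str]]:
    """Map every term to the full set of terms in its equivalence rule."""
    lookup: Dict[str, Set[str]] = {}
    for line in rules.splitlines():
        terms = {part.strip().lower() for part in line.split(",") if part.strip()}
        for term in terms:
            lookup.setdefault(term, set()).update(terms)
    return lookup


def expand(term: str, lookup: Dict[str, Set[str]]) -> List[str]:
    """Return the query term together with its mapped equivalents."""
    key = term.lower()
    return sorted(lookup.get(key, {key}))
```

With this lookup, expanding "canine" also produces "dog" and "puppy", which is why a query on one term can match a document that contains another.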

Reference:

https://docs.microsoft.com/en-us/azure/search/search-synonyms

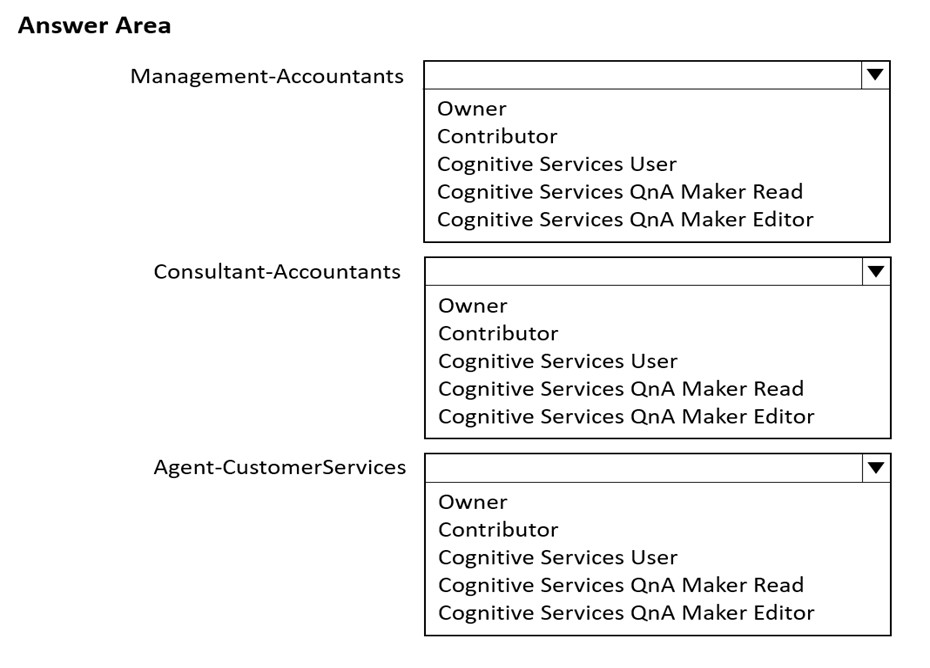

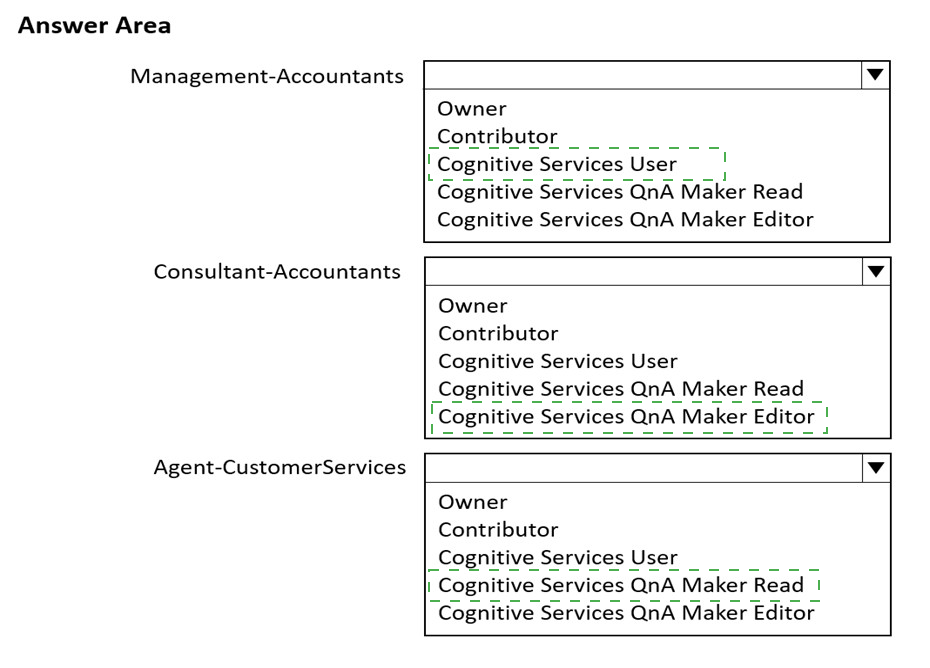

You build a QnA Maker resource to meet the chatbot requirements.

Which RBAC role should you assign to each group? To answer, select the appropriate

options in the answer area.

NOTE: Each correct selection is worth one point.

Explanation:

Box 1: Cognitive Services User

Ensure that the members of a group named Management-Accountants can approve the

FAQs.

Approve = publish.

Cognitive Services User (read/write/publish): API permissions: All access to Cognitive

Services resource except for ability to:

1. Add new members to roles.

2. Create new resources.

Box 2: Cognitive Services QnA Maker Editor

Ensure that the members of a group named Consultant-Accountants can create and

amend the FAQs.

QnA Maker Editor: API permissions:

1. Create KB API

2. Update KB API

3. Replace KB API

4. Replace Alterations

5. "Train API" [in new service model v5]

Box 3: Cognitive Services QnA Maker Read

Ensure that the members of a group named Agent-CustomerServices can browse the

FAQs.

QnA Maker Read: API Permissions:

1. Download KB API

2. List KBs for user API

3. Get Knowledge base details

4. Download Alterations

5. Generate Answer

You build a Language Understanding model by using the Language Understanding portal.

You export the model as a JSON file as shown in the following sample.

To what does the Weather.Historic entity correspond in the utterance?

A. by month

B. chicago

C. rain

D. location

Answer: A. by month

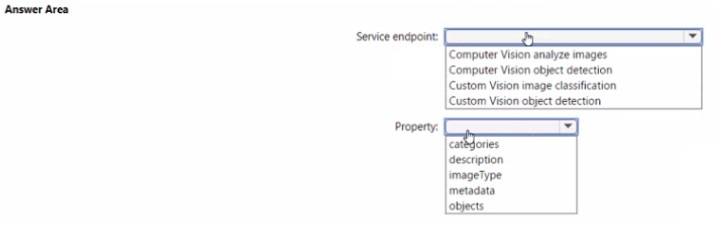

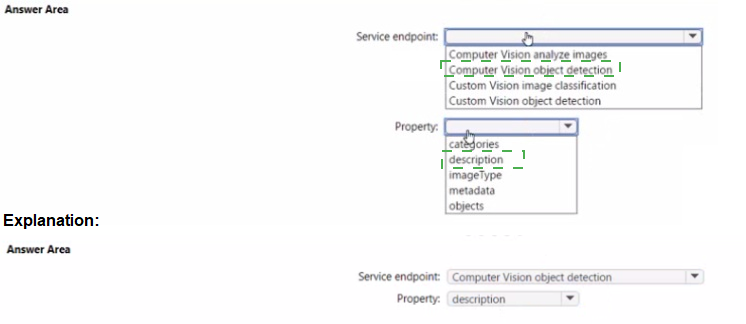

You have a library that contains thousands of images.

You need to tag the images as photographs, drawings, or clipart.

Which service endpoint and response property should you use? To answer, select the

appropriate options in the answer area.

NOTE: Each correct selection is worth one point.

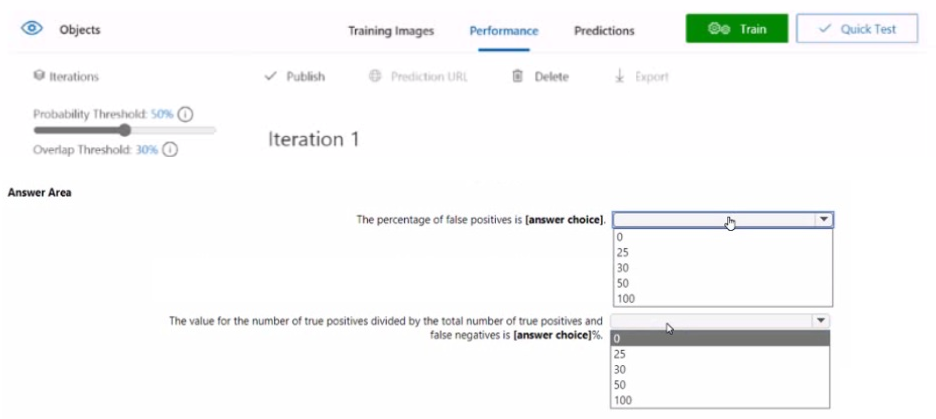

You are building a model to detect objects in images.

The performance of the model based on training data is shown in the following exhibit.

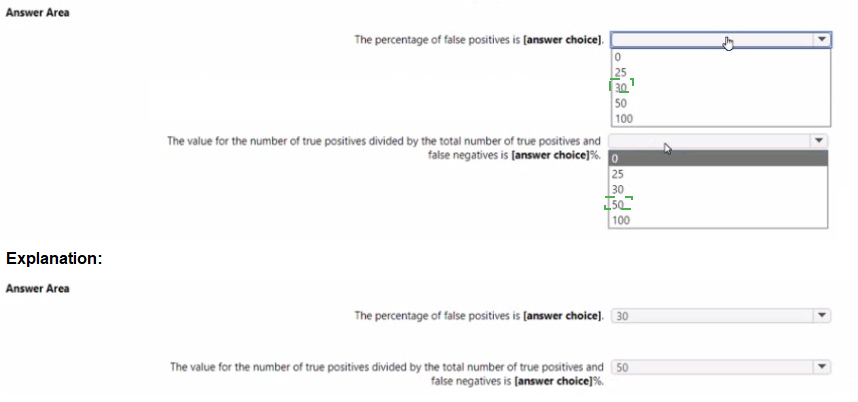

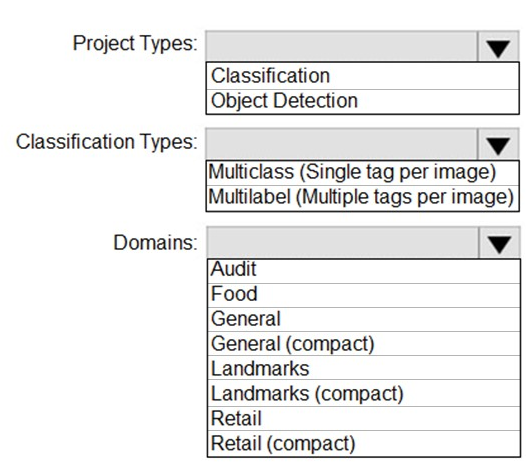

You are building a model that will be used in an iOS app.

You have images of cats and dogs. Each image contains either a cat or a dog.

You need to use the Custom Vision service to detect whether each image is of a cat or a

dog.

How should you configure the project in the Custom Vision portal? To answer, select the

appropriate options in the answer area.

NOTE: Each correct selection is worth one point.


Box 1: Classification

Box 2: Multiclass

A multiclass classification project is for classifying images into a set of tags, or target

labels. An image can be assigned to one tag only.

Box 3: General

General: Optimized for a broad range of image classification tasks. If none of the other

specific domains are appropriate, or if you're unsure of which domain to choose, select one

of the General domains.

You are building a multilingual chatbot.

You need to send a different answer for positive and negative messages.

Which two Text Analytics APIs should you use? Each correct answer presents part of the

solution. (Choose two.)

NOTE: Each correct selection is worth one point.

A. Linked entities from a well-known knowledge base

B. Sentiment Analysis

C. Key Phrases

D. Detect Language

E. Named Entity Recognition

Answers: B. Sentiment Analysis; D. Detect Language

Explanation:

B: The Text Analytics API's Sentiment Analysis feature provides two ways for detecting

positive and negative sentiment. If you send a Sentiment Analysis request, the API will

return sentiment labels (such as "negative", "neutral" and "positive") and confidence scores

at the sentence and document-level.

D: The Language Detection feature of the Azure Text Analytics REST API evaluates text

input for each document and returns language identifiers with a score that indicates the

strength of the analysis.

This capability is useful for content stores that collect arbitrary text, where language is

unknown.

Reference:

https://docs.microsoft.com/en-us/azure/cognitive-services/text-analytics/how-tos/textanalytics-how-to-sentiment-analysis?tabs=version-3-1

https://docs.microsoft.com/en-us/azure/cognitive-services/text-analytics/how-tos/textanalytics-how-to-language-detection
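
To show how the two features combine, the sketch below picks a reply from the detected language and the sentiment label. It assumes each API result has already been fetched and parsed into a dictionary: `detectedLanguage.iso6391Name` for language detection and `sentiment` for sentiment analysis are the assumed field names, and the reply texts are placeholders.

```python
from typing import Any, Dict, Tuple

# Placeholder replies keyed by (language code, sentiment label).
REPLIES: Dict[Tuple[str, str], str] = {
    ("en", "positive"): "Glad to hear it!",
    ("en", "negative"): "Sorry about that. Let me help.",
    ("fr", "positive"): "Ravi de l'apprendre !",
    ("fr", "negative"): "Désolé. Laissez-moi vous aider.",
}
DEFAULT_REPLY = "Thanks for your message."


def choose_reply(language_doc: Dict[str, Any], sentiment_doc: Dict[str, Any]) -> str:
    """Select a reply that matches both the message language and its sentiment."""
    lang = language_doc["detectedLanguage"]["iso6391Name"]
    label = sentiment_doc["sentiment"]
    # Neutral or mixed messages, and unsupported languages, get the default.
    return REPLIES.get((lang, label), DEFAULT_REPLY)
```

Detecting the language first matters in a multilingual bot because the reply table is keyed by language as well as sentiment.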

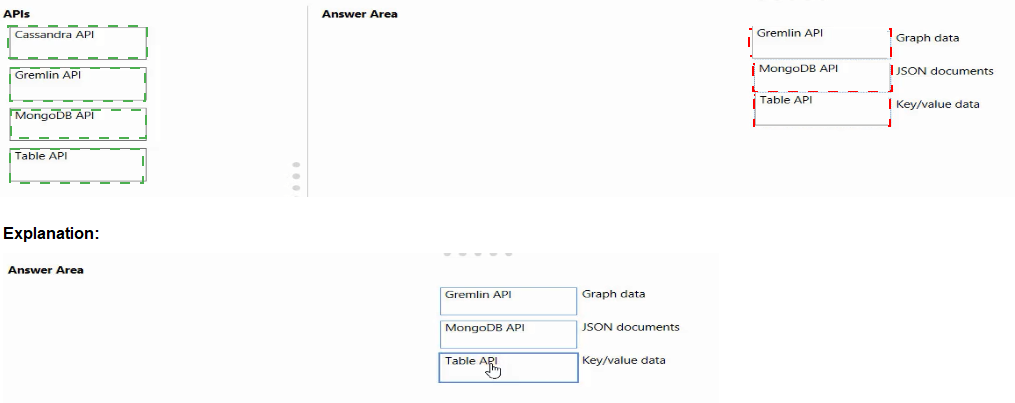

Match the Azure Cosmos DB APIs to the appropriate data structures.

To answer, drag the appropriate API from the column on the left to its data structure on the

right. Each API may be used once, more than once, or not at all.

NOTE: Each correct match is worth one point.

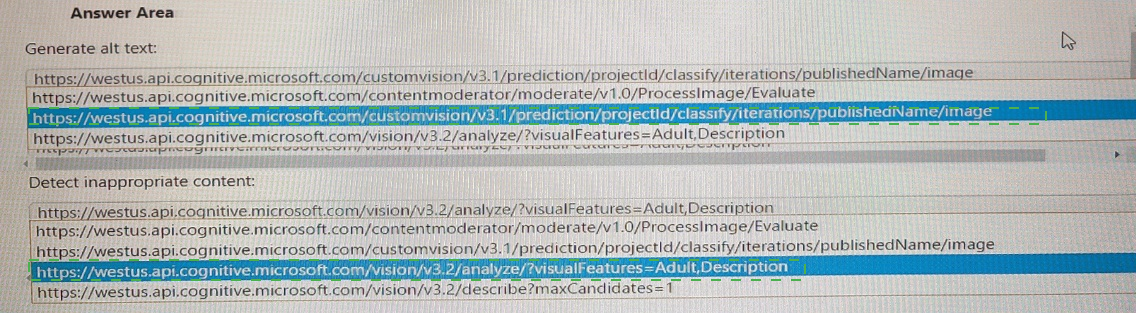

You are building an app that will enable users to upload images. The solution must meet

the following requirements:

• Automatically suggest alt text for the images.

• Detect inappropriate images and block them.

• Minimize development effort.

You need to recommend a computer vision endpoint for each requirement.

What should you recommend? To answer, select the appropriate options in the answer

area.

NOTE: Each correct selection is worth one point.

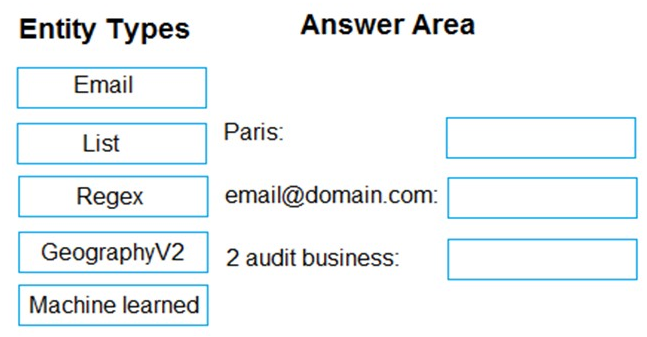

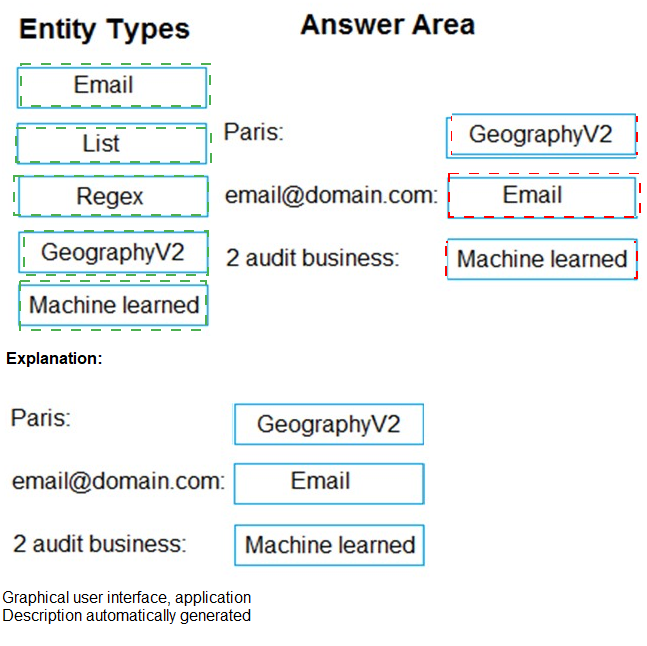

You are building a Language Understanding model for purchasing tickets.

You have the following utterance for an intent named PurchaseAndSendTickets.

Purchase [2 audit business] tickets to [Paris] [next Monday] and send tickets to

[email@domain.com]

You need to select the entity types. The solution must use built-in entity types to minimize

training data whenever possible.

Which entity type should you use for each label? To answer, drag the appropriate entity

types to the correct labels. Each entity type may be used once, more than once, or not at

all.

You may need to drag the split bar between panes or scroll to view content.

Box 1: GeographyV2

The prebuilt geographyV2 entity detects places. Because this entity is already trained, you

do not need to add example utterances containing GeographyV2 to the application intents.

Box 2: Email

Email prebuilt entity for a LUIS app: Email extraction includes the entire email address from

an utterance. Because this entity is already trained, you do not need to add example

utterances containing email to the application intents.

Box 3: Machine learned

The machine-learning entity is the preferred entity for building LUIS applications.
